GigRadar watches job marketplaces in real time, scores each post for fit, drafts tailored openers you can send in minutes, and closes the loop with performance data. Under the hood you’ll find four big systems: ingest & normalize, rank & route, compose & assist, and measure & learn. This article breaks down each piece so agencies can evaluate whether gigradar (and tools like it) match their workflow—and how to get the most from gigradar job alerts, the gigradar ai bidder, and gigradar analytics.

What problem is GigRadar actually solving?

Winning work on marketplaces is a speed-and-signal game. Every hour, hundreds of posts appear; many are a poor fit, a few are perfect. Humans are excellent at judgment, but terrible at 24/7 scanning and context switching. Tools like gigradar automate the drudgework: finding, filtering, prioritizing, and giving you a credible first draft—without removing the human decision. The result is more proposals to the right opportunities, sent faster and with higher consistency.

Teams that adopted this loop early have already seen how it compounds — one data-analytics agency even turned their Upwork channel profitable in just a few weeks.

System Overview: The Four Engines

Think of GigRadar as four cooperating engines behind a clean UI:

- Ingest & Normalize — Collects new postings across sources; standardizes titles, bodies, budgets, and metadata.

- Rank & Route — Scores every job against your lanes, past wins, and constraints; triggers gigradar job alerts in real time or batched digests.

- Compose & Assist — The gigradar ai bidder drafts short, specific openers with a tiny milestone and acceptance criteria; you review and send.

- Measure & Learn — gigradar analytics tracks reply, shortlist, and win rates by lane, budget tier, sender, and timing; the model adapts your ranking and copy suggestions.

Let’s open the hood on each.

1) Ingest & Normalize: Turning noisy posts into structured data

Sources & polling. The ingest layer listens for new posts via marketplace feeds, APIs, or watchlists. It prioritizes freshness (think seconds-to-minutes) and de-duplicates similar listings across overlapping channels.

Normalization. Raw posts vary wildly. The pipeline:

- Language detection & translation, so non-English posts still score correctly.

- Entity extraction (skills, frameworks, CMS, verticals, regions) using a domain-tuned NER model.

- Budget unification (hourly vs fixed; local currency → your base currency).

- Quality heuristics (length, specificity, payment verified, proposal count).

- Safety & policy checks (flags for off-platform requests, sensitive data).

By the time you see a card, GigRadar’s already converted a messy paragraph into a structured record your ranking model can reason about.
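To make the budget-unification step concrete, here is a minimal Python sketch. It is an illustration only, not GigRadar's actual pipeline: the `Budget` record, the static `RATES_TO_USD` table, and the 40-hour default estimate are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical static conversion rates to the base currency (USD).
# A real pipeline would fetch current rates from a rates service.
RATES_TO_USD = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25}


@dataclass
class Budget:
    kind: str        # "hourly" or "fixed"
    amount: float    # hourly rate or fixed price, in `currency`
    currency: str    # ISO code, e.g. "EUR"


def unify_budget(budget: Budget, est_hours: float = 40.0) -> float:
    """Return an estimated total in USD so hourly and fixed posts compare.

    `est_hours` is an assumed engagement length used only for hourly posts.
    """
    if budget.currency not in RATES_TO_USD:
        raise ValueError(f"no conversion rate for {budget.currency}")
    usd = budget.amount * RATES_TO_USD[budget.currency]
    if budget.kind == "hourly":
        return round(usd * est_hours, 2)
    if budget.kind == "fixed":
        return round(usd, 2)
    raise ValueError(f"unknown budget kind: {budget.kind}")
```

With a single comparable number per post, a budget floor can be applied the same way to hourly and fixed listings.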

2) Rank & Route: How the model knows which jobs are “yours”

Your fingerprint (the “ICP”). During setup, you define lanes (e.g., Shopify CRO, React dashboards, B2B SEO) with minimum budgets, time-zone overlap, and forbidden keywords. You import artifacts (Looms, case notes) and optionally connect past wins/losses. That becomes your “ideal client profile” map.

Scoring features. The ranker blends:

- Semantic similarity between the post and your lane examples (vector embeddings).

- Constraint satisfaction (budget floors/ceilings, category, region).

- Freshness multiplier (you’ll see “<5 proposals” jobs sooner).

- Trust signals (payment verified, prior hires, tone heuristics).

- Historical lift (what you historically win after controlling for price and timing).
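One way to picture how these features could combine is the sketch below. It is a simplified assumption, not the product's real ranker: the weights, the 0.7 staleness factor, and the `score_job` signature are invented for illustration, and historical lift is left out to keep the example short.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def score_job(
    post_vec: Sequence[float],
    lane_vec: Sequence[float],
    budget_usd: float,
    budget_floor: float,
    proposal_count: int,
    payment_verified: bool,
    w_similarity: float = 0.8,   # assumed weight
    w_trust: float = 0.2,        # assumed weight
) -> float:
    """Blend similarity and trust, then scale by a freshness multiplier."""
    # Constraint satisfaction: a post under the budget floor is out.
    if budget_usd < budget_floor:
        return 0.0
    similarity = max(0.0, cosine(post_vec, lane_vec))
    trust = 1.0 if payment_verified else 0.0
    # Freshness multiplier: posts with fewer than 5 proposals keep full score.
    freshness = 1.0 if proposal_count < 5 else 0.7
    return (w_similarity * similarity + w_trust * trust) * freshness
```

Treating budget as a hard gate and freshness as a multiplier mirrors the list above: constraints disqualify a post, while freshness only moves it up or down the queue.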

Priority buckets. Each new job lands in one of three buckets:

- P1 (act now): Near-perfect fit and fresh; triggers a push notification and a top-of-inbox gigradar job alert.

- P2 (batch): Good fit but less urgent; appears in your next digest.

- Archive: Off-lane or fails policy checks.

You can tune aggressiveness (how many P1s you want per day) so you never drown in alerts.
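The bucket logic and the aggressiveness setting can be sketched roughly as follows. The thresholds and the `max_p1_per_day` parameter are hypothetical, and the score is assumed to already reflect fit and freshness.

```python
from typing import Dict, Iterable, List, Tuple


def assign_buckets(
    scored_jobs: Iterable[Tuple[str, float]],
    p1_threshold: float = 0.8,   # assumed cut-off for "act now"
    p2_threshold: float = 0.5,   # assumed cut-off for the digest
    max_p1_per_day: int = 5,     # the "aggressiveness" knob
) -> Dict[str, List[str]]:
    """Route (job_id, score) pairs into P1, P2, or Archive.

    Jobs are processed best-first, so the daily P1 cap keeps only the
    strongest fits; overflow falls through to the P2 digest.
    """
    buckets: Dict[str, List[str]] = {"P1": [], "P2": [], "Archive": []}
    ranked = sorted(scored_jobs, key=lambda pair: pair[1], reverse=True)
    for job_id, score in ranked:
        if score >= p1_threshold and len(buckets["P1"]) < max_p1_per_day:
            buckets["P1"].append(job_id)
        elif score >= p2_threshold:
            buckets["P2"].append(job_id)
        else:
            buckets["Archive"].append(job_id)
    return buckets
```

Lowering `max_p1_per_day` does not discard strong jobs; it only moves them from push alerts into the next digest.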

3) Compose & Assist: What the gigradar AI bidder actually writes

The gigradar ai bidder isn’t a “send it blindly” robot. It’s a drafting assistant built around a modern, phone-length cover-letter pattern that’s proven to lift replies:

- Two specifics from the post to prove you read it.

- A tiny milestone with clear acceptance criteria (Done = …) in the client’s language.

- One proof artifact (Loom, before/after screenshot, or spec snippet).

- Logistics (time-zone overlap, stack fluency).

- Choice-based CTA (“10-minute call or I can send a 2-slide plan—your pick”).

Under the hood. The system:

- Summarizes the post into goals, constraints, and risks.

- Suggests a micro-milestone mapped to your lane library (e.g., “CWV Fix Pack,” “Decisionable Dashboard v1”), then paraphrases acceptance criteria in the client’s words.

- Selects one artifact from your vault that best matches the post’s stack/vertical.

- Drafts an opener in 150–220 words, with variables filled from the post and your profile.

You can force “proof-first,” “plan-first,” or “question-led” modes, and you always review before sending—human-in-the-loop by design.
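As a rough illustration of the review step, the checklist below validates a draft against the pattern described above before a human sends it. The `OpenerParts` structure and `validate_opener` helper are hypothetical names for this sketch, not part of any GigRadar API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OpenerParts:
    specifics: List[str]    # details quoted from the job post
    done_criteria: str      # acceptance criteria, e.g. "Done = ..."
    artifacts: List[str]    # links to proof artifacts
    cta: str                # choice-based call to action


def validate_opener(
    parts: OpenerParts, body: str, min_words: int = 150, max_words: int = 220
) -> List[str]:
    """Return a list of problems; an empty list means the draft fits the pattern."""
    problems: List[str] = []
    if len(parts.specifics) != 2:
        problems.append("expected exactly two specifics from the post")
    if not parts.done_criteria.startswith("Done ="):
        problems.append("acceptance criteria should start with 'Done ='")
    if len(parts.artifacts) != 1:
        problems.append("expected exactly one proof artifact")
    if " or " not in parts.cta:
        problems.append("CTA should offer a choice")
    word_count = len(body.split())
    if not min_words <= word_count <= max_words:
        problems.append(f"body is {word_count} words, outside {min_words}-{max_words}")
    return problems
```

A check like this only flags structure and length; judging whether the draft actually sounds right stays with the reviewer.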

4) Measure & Learn: Closing the loop with gigradar analytics

If you can’t see improvement, you won’t trust the tool. gigradar analytics emphasizes a handful of actionable metrics:

- Reply rate (replies ÷ proposals) and time-to-first-reply.

- Shortlist (interview) rate and win rate (funded ÷ proposals).

- RPP (revenue per proposal) — the compounding metric agencies care about.

- Speed-to-lead (minutes from post to send) and post age at send.

- Variant testing (A/B of opener style, CTA, proof type).

Dashboards segment by lane, budget tier, sender, and priority bucket (P1/P2), then recommend tweaks: tighten a negative keyword, swap the opener to proof-first for a particular lane, or increase your real-time window during certain hours.
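The core rates are simple ratios over sent proposals. Here is a minimal sketch of how they could be computed; the `Proposal` record and its field names are assumptions for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Proposal:
    lane: str
    replied: bool
    shortlisted: bool
    funded: bool
    revenue: float = 0.0   # revenue from the funded contract, if any


def funnel_metrics(proposals: List[Proposal]) -> Dict[str, float]:
    """Compute reply, shortlist, and win rates plus revenue per proposal (RPP)."""
    n = len(proposals)
    if n == 0:
        return {"reply_rate": 0.0, "shortlist_rate": 0.0, "win_rate": 0.0, "rpp": 0.0}
    return {
        "reply_rate": sum(p.replied for p in proposals) / n,
        "shortlist_rate": sum(p.shortlisted for p in proposals) / n,
        "win_rate": sum(p.funded for p in proposals) / n,
        # RPP divides total funded revenue by ALL proposals sent, not just wins.
        "rpp": sum(p.revenue for p in proposals if p.funded) / n,
    }
```

To segment by lane or budget tier, filter the list first and call `funnel_metrics` on each slice.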

Real-Time vs Digest Alerts: Why both modes exist

gigradar job alerts support two rhythms:

- Real-time (push): For lanes where being early matters (bug fixes, migrations, quick design updates). You process these in short “bid sprints” (15–30 minutes, 2–3×/day).

- Daily digests: For consultative, research-heavy work (strategy, data, content). You review calmly, write stronger messages, and avoid context-switching.

Most teams run a hybrid: P1 lanes on real-time, P2 lanes on digests. Analytics reveals where speed lifts replies versus where depth lifts wins.

Safety, Compliance, and Platform-Friendly Design

No one wants to get rate-limited or flagged. A platform-friendly tool:

- Never auto-submits without your explicit action.

- Respects marketplace policies (no off-platform solicitations, no scraping private data, no fake personas).

- Keeps an audit trail of who sent what, when, and why (features → decision → draft → final).

- Supports NDAs and SOW/IP language in a lightweight way so your first milestone is easy to approve.

Your brand is your moat; gigradar treats automation as assistive, not autonomous.

Example: From Job Post to Draft in 90 Seconds

- Ping: A P1 gigradar job alert arrives: “Shopify PDP performance; payment verified; $5k–$10k.”

- Card view: You see extracted entities (Theme 2.0, mobile LCP, CLS issues), budget, proposal count (“<5”), and confidence score.

- Draft: Click “Compose”—the gigradar ai bidder pulls your “CWV Fix Pack” template and fills:

- Two specifics from the post.

- Micro-milestone: “LCP < 2.8s & CLS < 0.1 on PDP mobile,” deliverables, and a 3–5 day estimate.

- Proof: your 80-sec Loom of a similar PDP fix.

- CTA: “10-minute call or 2-slide plan.”

- Edit & send: You tweak one phrase, hit send, and the thread enters analytics with variant tags to learn from outcomes.

That’s the loop—fast, human, and measurable.

What does “learning” look like in practice?

Copy learning. If proof-first openers outperform plan-first by 20% in “Shopify <$5k,” the model will recommend proof-first as the default for that lane. If binary CTAs (“Option A or B?”) win more interviews in B2B content, you’ll see that too.

Ranking learning. If you keep declining posts that contain “speed optimization on blog” because they underpay, the negative keyword list for that lane updates and your P1 threshold tightens.

Timing learning. If your reply rate jumps when you send within 45 minutes of posting in CET mornings, the system will gently nudge you to prioritize those windows.

This is where gigradar analytics earns its keep: fewer guesses, more feedback loops.
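The copy-learning rule described above (switch the default once one opener style clearly outperforms another) could look something like this sketch. The minimum sample size and the `recommend_variant` helper are assumptions, and a production system would likely add a proper significance test.

```python
from typing import Dict, Optional, Tuple


def recommend_variant(
    results: Dict[str, Tuple[int, int]],
    min_sent: int = 30,      # assumed minimum proposals per variant
    min_lift: float = 0.20,  # relative lift needed to change the default
) -> Optional[str]:
    """Pick a default opener style, or None if the evidence is too thin.

    `results` maps a variant name to (replies, proposals_sent).
    """
    if len(results) < 2:
        return None
    rates: Dict[str, float] = {}
    for name, (replies, sent) in results.items():
        if sent < min_sent:
            return None  # not enough data to compare yet
        rates[name] = replies / sent
    ranked = sorted(rates, key=lambda name: rates[name], reverse=True)
    best, runner_up = ranked[0], ranked[1]
    if rates[runner_up] == 0:
        return best if rates[best] > 0 else None
    lift = (rates[best] - rates[runner_up]) / rates[runner_up]
    return best if lift >= min_lift else None
```

For example, 20 replies from 50 proof-first openers against 15 replies from 50 plan-first openers is a relative lift of about 33%, so the sketch would recommend proof-first for that lane.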

Benchmarks to Aim For (Agency Context)

Every market is different, but focused agencies commonly target:

- Reply: 25–35% (stretch 35–45%)

- Shortlist: 12–22% (stretch 18–30%)

- Win: 6–12% (stretch 10–20%)

- Median speed-to-lead: ≤60 minutes on P1 fits

Hitting these isn’t just an AI feat; it’s clean inputs, well-named micro-milestones, and a strict “one artifact” rule—areas where gigradar helps you stay consistent.

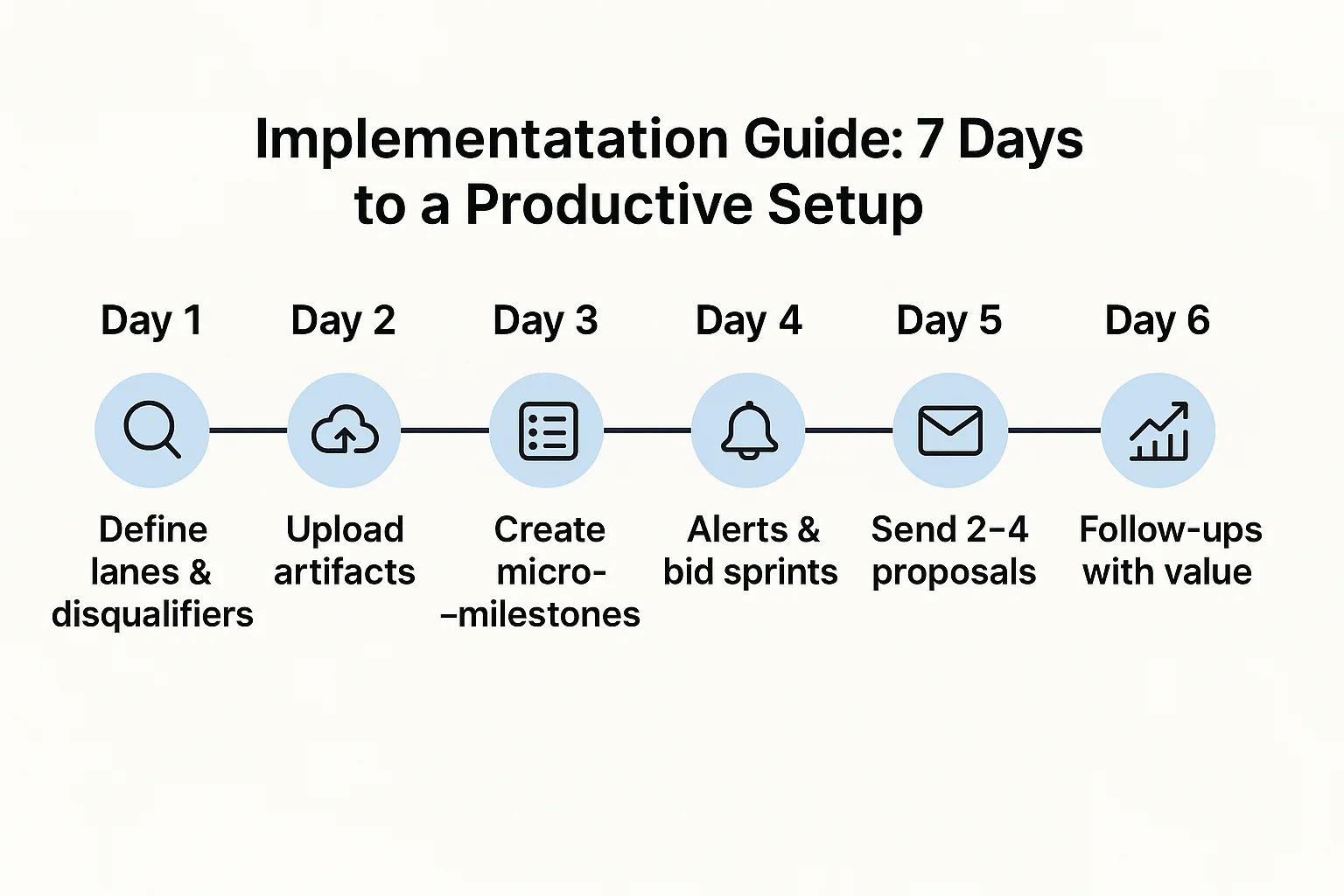

Implementation Guide: 7 Days to a Productive Setup

Day 1 — Define lanes & disqualifiers.

Write 2–4 lanes you actually sell. Add budget floors, regions, and 5–10 negative keywords per lane.

Day 2 — Upload artifacts.

One Loom and one before/after screenshot per lane. Short, outcome-titled (“PDP LCP Fix — 80s Loom”).

Day 3 — Micro-milestones (“Done = …”).

Create acceptance-criteria lines in client language for each lane (e.g., “Done = LCP < 2.8s, CLS < 0.1 on PDP mobile test pages; rollback notes.”).

Day 4 — Alerts & sprints.

Wire gigradar job alerts: P1 real-time, P2 digests. Block 2–3 bid sprints in your calendar.

Day 5 — Send 2–4 proposals.

Use the gigradar ai bidder to draft; keep the opener phone-length, attach one artifact, and include Done = ….

Day 6 — Follow-ups with value.

T+24h risk note, T+72h 2-slide plan. No “just checking in.”

Day 7 — Review with gigradar analytics.

Check reply/shortlist by lane and timing. Tighten one negative keyword list; test proof-first vs plan-first next week.

You now have a repeatable loop that compounds.

To keep that momentum, it helps to think about how the loop fits into your wider client-acquisition funnel — this guide breaks down a proven Upwork agency funnel step by step.

Tips for Senior-Quality Messages (Even When You’re Fast)

- Mirror the buyer: Reuse the post’s wording in Done = … to reduce friction.

- Be single-minded: One proof artifact in the message; attach two matched samples at most.

- Name your milestone by outcome: “Fix Pack & Validation,” not “Week 1.”

- Offer choices, not essays: A small fixed slice, or hourly with a cap for exploration.

- Stay policy-safe: Keep everything on-platform; never promise off-platform workarounds.

These habits make your brand look calm and trustworthy—AI can draft them, but only you can enforce them.

Final Thoughts

AI doesn’t replace your judgment; it amplifies it. The magic of gigradar is boring on purpose: watch everything, surface only what fits, help you sound precise, and learn from outcomes. With tuned gigradar job alerts, a reliable gigradar ai bidder workflow, and clear insights from gigradar analytics, agencies can spend less time refreshing feeds and more time closing the right work.

Build clean lanes, keep acceptance criteria front-and-center, and iterate weekly. The result is a quiet, compounding edge—faster replies, better shortlists, and wins that look exactly like the work you want to do next.
