TL;DR

You don’t need a PhD or fancy tools to A/B test Upwork proposals. Pick one variable (opener, CTA, proof), run two variants in parallel for 1–2 weeks, tag every send, and track a few core metrics (reply rate, interview rate, win rate, time-to-reply, revenue per proposal). Make decisions weekly: keep the winner, kill the loser, and test the next idea. Treat it like product iteration, not lab science, and the gains will start compounding.

Why test your proposals at all?

Speed and fit matter on Upwork, but message quality decides who gets the reply. Tiny changes to the first 150–220 words can move conversion by double digits, especially when you’re submitting to fresh posts. Testing your Upwork proposals systematically lets you:

- Raise reply and interview rates without increasing volume or Connect spend.

- Learn what each lane (dev, design, SEO, data, mobile) responds to.

- Build a repeatable library of proven patterns your team can use.

- Protect your energy: fewer, better proposals beat spray-and-pray.

Think of Upwork proposal experiments as weekly sprints to remove guesswork.


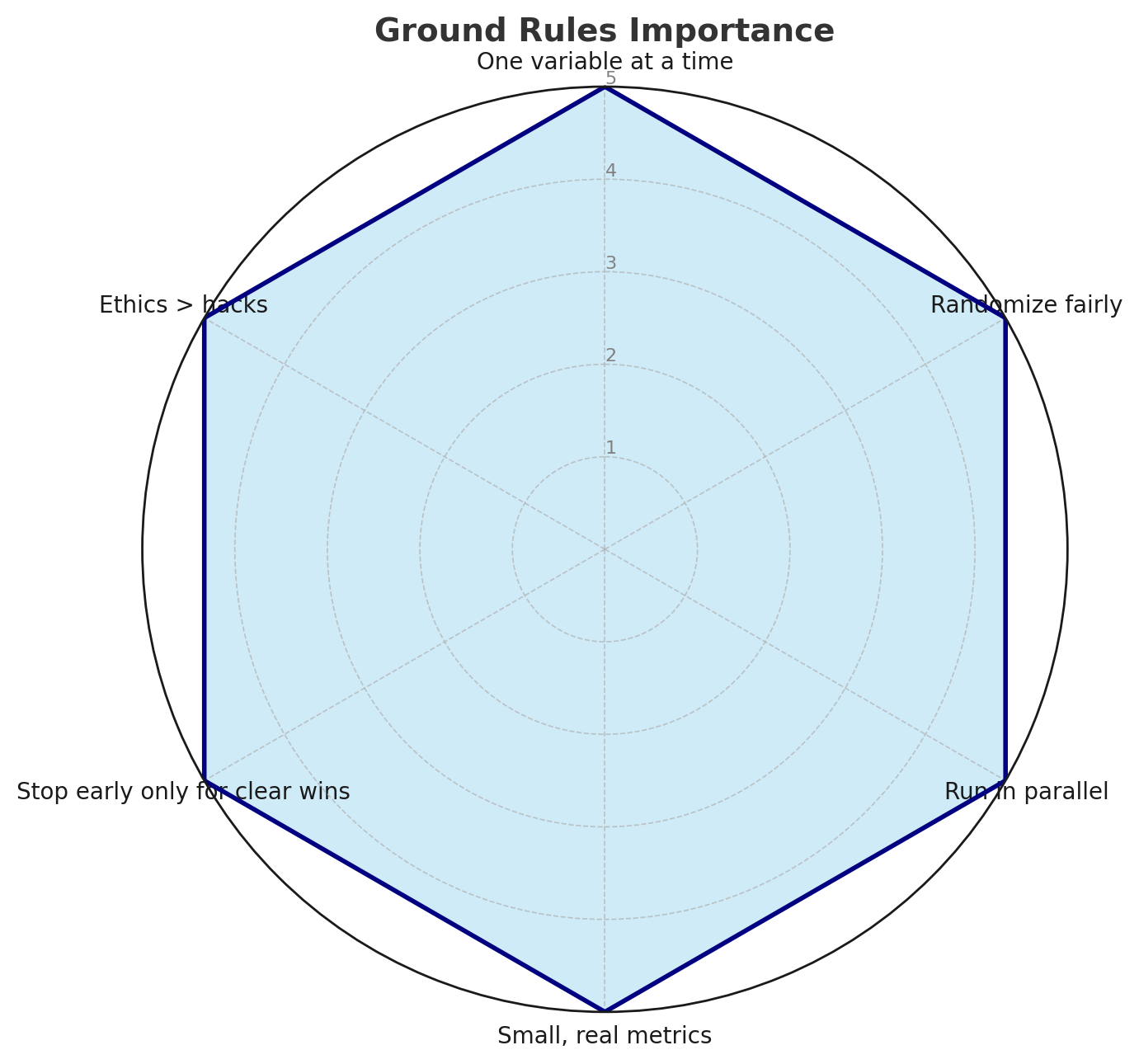

Ground rules (so you don’t fool yourself)

- One variable at a time. Change only the opener or CTA or proof—never all three.

- Randomize fairly. Alternate variants by day or job index (odd/even) to reduce bias.

- Run in parallel. Don’t do Variant A this week and B next week; conditions shift.

- Small, real metrics. Replies and interviews are leading indicators; wins and revenue confirm.

- Stop early only for clear wins. Otherwise, let the test run to your sample goal.

- Ethics > hacks. No exaggeration, no bait-and-switch. Testing is about clarity and fit, not trickery.

The step-by-step plan

Step 1 — Choose your North Star metric

Pick one primary metric for decisions, plus a couple of secondaries.

- Primary: Reply rate (replies ÷ proposals) or Interview rate (interviews ÷ proposals).

- Secondary: Win rate (hires ÷ proposals), Time-to-first-reply (minutes/hours), Revenue per proposal (optional but powerful).

Make it consistent across tests. Your proposal analytics will be much easier to read.

Step 2 — Create a simple tracker

You can use a sheet, Notion, or CRM. Minimum columns:

- Date/time sent

- Job link & title

- Lane (e.g., “Shopify CRO,” “React dashboards”)

- Post age when sent (minutes)

- Proposals so far (“<5”, “5–10”, “10+”)

- Variant (A or B)

- Opener type (plan-first / proof-first / question-led)

- Micro-milestone with Done = … included (yes/no)

- Proof artifact included (Loom/screenshot/spec) yes/no

- Reply? (Y/N) — timestamp

- Interview? (Y/N) — date

- Hire? (Y/N) — value

- Notes

This is enough to run solid proposal analytics without drowning in data.
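If you keep the tracker as a CSV file rather than a sheet, the columns above could map to a small record type. This is only a sketch: the field names, the example URL, and the choice of CSV are assumptions for illustration, not a required schema.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields
from typing import Optional


@dataclass
class ProposalRow:
    """One sent proposal. Field names are illustrative, not a standard."""
    sent_at: str                  # ISO timestamp of the send
    job_url: str
    job_title: str
    lane: str                     # e.g. "Shopify CRO"
    post_age_min: int             # minutes since the job was posted
    proposals_so_far: str         # "<5", "5-10", or "10+"
    variant: str                  # "A" or "B"
    opener_type: str              # "plan-first", "proof-first", "question-led"
    micro_milestone: bool
    proof_artifact: bool
    replied_at: Optional[str] = None    # stays empty until the client replies
    interview_on: Optional[str] = None
    hire_value: Optional[float] = None  # contract value if hired
    notes: str = ""


def rows_to_csv(rows: list[ProposalRow]) -> str:
    """Serialize rows to CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(ProposalRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))  # None values are written as empty cells
    return buf.getvalue()


# Made-up example row.
example = ProposalRow(
    sent_at="2024-05-06T09:15",
    job_url="https://example.com/jobs/123",
    job_title="Speed up Shopify product pages",
    lane="Shopify CRO",
    post_age_min=25,
    proposals_so_far="<5",
    variant="A",
    opener_type="plan-first",
    micro_milestone=True,
    proof_artifact=True,
)
print(rows_to_csv([example]))
```

Leaving the reply, interview, and hire fields empty at send time means you fill them in later without touching the variant tag.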

Step 3 — Pick one variable to test

Start with the highest-leverage elements:

- Opener: plan-first vs proof-first vs question-led

- CTA: call vs async 2-slide plan; binary question vs open ask

- Micro-milestone: with/without, or different Done = … phrasing

- Proof: Loom vs screenshot vs spec snippet

- Length: 150–180 words vs 220–260 words

- Timing: respond within 30 minutes vs 2–4 hours (when feasible)

- Attachment strategy: exactly two samples vs portfolio link only

You’ll A/B test Upwork proposals faster if you prioritize variables near the top of your message.

Step 4 — Draft Variants A and B

Variant A (control)

Two details stood out: {{specific_1}} and {{specific_2}}. I’d start with a 3-day milestone so you can see progress fast: Done = {{acceptance_criteria}}.

Recent: {{result}} for a {{industry}} project (60-sec Loom). I’m {{timezone}} with {{overlap}} overlap. Prefer a 10-minute call, or I can send a 2-slide plan today—your pick.

Variant B (example change: proof-first + binary CTA)

Shipped {{similar_result}} last month using {{stack}} (short Loom). For {{goal}}, I’d start with {{micro_step}}—Done = {{acceptance_criteria}} this week.

Do you prefer Option A (faster, narrower) or Option B (deeper, more flexible)? I’ll draft the milestone around your pick.

Keep everything else identical. That’s a clean test.

Step 5 — Randomize fairly

Pick a simple rule so you don’t “choose” where to use the better-sounding variant:

- Odd/even rule: If the job ID or posted minute is odd → A; even → B.

- Day rule: Even-numbered days → A; odd → B.

- Queue rule (teams): Alternate A/B as jobs get triaged P1.

Consistency beats perfection; you just need to avoid cherry-picking.
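The three rules above can each be written as a one-line function, which helps if a VA or script does the tagging. This is a minimal sketch; it assumes you have a numeric job ID (or any stable number tied to the job) and that the queue position starts at 0.

```python
from datetime import date


def assign_by_job_id(job_id: int) -> str:
    """Odd/even rule: odd job IDs get A, even job IDs get B."""
    return "A" if job_id % 2 == 1 else "B"


def assign_by_day(sent_on: date) -> str:
    """Day rule: even-numbered calendar days get A, odd days get B."""
    return "A" if sent_on.day % 2 == 0 else "B"


def assign_by_queue(position: int) -> str:
    """Queue rule: alternate A/B in triage order (position 0 gets A)."""
    return "A" if position % 2 == 0 else "B"


print(assign_by_job_id(101), assign_by_day(date(2024, 5, 6)), assign_by_queue(3))
```

The point of writing the rule down is that the variant is decided before you read the job post closely, so your preference for one variant cannot leak into the assignment.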

Step 6 — Set a sample goal and timebox

A lightweight, realistic rule: collect at least 30–50 proposals per variant or run for two weeks, whichever happens first. Smaller accounts can go with 20 per variant to keep momentum, then confirm in a follow-up test.

Why not wait for “statistical significance”? You’re operating in a marketplace with moving parts (category, budget, post age). Aim for directionally correct, repeatable wins, not academic certainty.
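If you want the stopping rule to be mechanical rather than a judgment call, it could look like the sketch below. The defaults (30 sends per variant, 14 days) are taken from the rule of thumb above; the function name and parameters are hypothetical.

```python
from datetime import date, timedelta


def experiment_is_done(
    sends_a: int,
    sends_b: int,
    started: date,
    today: date,
    per_variant_goal: int = 30,  # lower end of the 30-50 range; use 20 for small accounts
    max_days: int = 14,          # two-week timebox
) -> bool:
    """Stop when both variants hit the sample goal or the timebox ends, whichever is first."""
    hit_sample = sends_a >= per_variant_goal and sends_b >= per_variant_goal
    hit_timebox = today - started >= timedelta(days=max_days)
    return hit_sample or hit_timebox


print(experiment_is_done(18, 17, started=date(2024, 5, 1), today=date(2024, 5, 10)))
```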

Step 7 — Run the test (and protect quality)

- Stick to your lanes; don’t test on bad-fit jobs just to hit sample size.

- Keep your “specificity-or-skip” rule. If you can’t cite two details from the post, don’t send.

- Cap daily sends (solo: 3–6; per seat: 2–4) to avoid burnout.

Step 8 — Analyze simply

After you hit your sample goal, calculate for each variant:

- Reply rate = replies ÷ proposals

- Interview rate = interviews ÷ proposals

- Median time-to-first-reply (smaller is better)

- Win rate = hires ÷ proposals

- RPP (revenue per proposal) = revenue ÷ proposals (if you track value)

If Variant B beats A by ≥20% on your primary metric (or saves ≥30% time-to-reply) and doesn’t harm win rate, adopt B. If results are mixed, keep A and test a different variable.
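As a rough illustration, the metrics and the adoption rule above could be computed like this. The row keys and the toy data are made up, and the sketch assumes "beats A by ≥20%" means a relative lift over A's rate rather than percentage points; adjust `min_lift` if you read it differently.

```python
from statistics import median


def summarize(rows):
    """Compute the per-variant metrics from a list of tracker rows (dicts)."""
    n = len(rows)
    if n == 0:
        raise ValueError("no proposals logged for this variant")
    reply_times = [r["reply_minutes"] for r in rows if r["reply_minutes"] is not None]
    return {
        "proposals": n,
        "reply_rate": len(reply_times) / n,
        "interview_rate": sum(r["interview"] for r in rows) / n,
        "win_rate": sum(r["hired"] for r in rows) / n,
        "median_reply_min": median(reply_times) if reply_times else None,
        "rpp": sum(r["revenue"] for r in rows) / n,  # revenue per proposal
    }


def adopt_b(a, b, primary="reply_rate", min_lift=0.20, min_time_saving=0.30):
    """Adopt B if it clears the lift or time-saving bar and does not lower win rate."""
    if b["win_rate"] < a["win_rate"]:
        return False
    if a[primary] == 0:
        lift_ok = b[primary] > 0
    else:
        # Assumption: lift is relative to A's rate, not percentage points.
        lift_ok = (b[primary] - a[primary]) / a[primary] >= min_lift
    ta, tb = a["median_reply_min"], b["median_reply_min"]
    time_ok = ta is not None and tb is not None and ta > 0 and (ta - tb) / ta >= min_time_saving
    return lift_ok or time_ok


def toy_rows(n, replies, interviews, hires, reply_minutes, value):
    """Build made-up tracker rows for the demo; these are not real results."""
    return [
        {
            "reply_minutes": reply_minutes if i < replies else None,
            "interview": i < interviews,
            "hired": i < hires,
            "revenue": value if i < hires else 0.0,
        }
        for i in range(n)
    ]


a = summarize(toy_rows(40, replies=7, interviews=4, hires=2, reply_minutes=180, value=500.0))
b = summarize(toy_rows(40, replies=13, interviews=7, hires=2, reply_minutes=120, value=500.0))
print(a["reply_rate"], b["reply_rate"], adopt_b(a, b))
```

Using the median for time-to-first-reply keeps one slow client from dragging the number around the way a mean would.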

Step 9 — Ship, document, and iterate

- Update your “Best Practices” doc with the new winner.

- Record the loser and why you think it lost (so you don’t retry it in six weeks).

- Queue the next single-variable test.

That rhythm—test → decide → document → repeat—is where compounding lives.

What to test (ideas that usually move numbers)

- Opener framing

  - Plan-first: lead with a tiny plan and Done = …

  - Proof-first: lead with one result + artifact

  - Question-led: one binary question (A or B?) to invite an easy reply

- Micro-milestone clarity

  - Acceptance criteria verbatim from the post vs your standard phrasing

  - 3-day slice vs 5-day slice

  - Deliverable labeled “audit/spec/prototype” vs generic “first milestone”

- Proof format

  - Loom (60–90s) vs before/after screenshot vs 1-page spec snippet

  - “One artifact” rule vs “two small artifacts” (careful not to look spammy)

- CTA style

  - “10-minute call” vs “2-slide async plan today”

  - Binary question (“Option A or B?”) vs open question (“Want the outline?”)

- Length & layout

  - 160–180 words vs 220–260 words

  - Bullets in the micro-plan vs inline sentences

- Timing

  - Under 30 minutes from posting vs 1–3 hours

  - Within the buyer’s business hours vs off-hours

- Attachments

  - Exactly two matched samples vs portfolio link only

  - Category-specific sample vs generic “top project”

Each idea is a clean, single variable for Upwork proposal experiments.

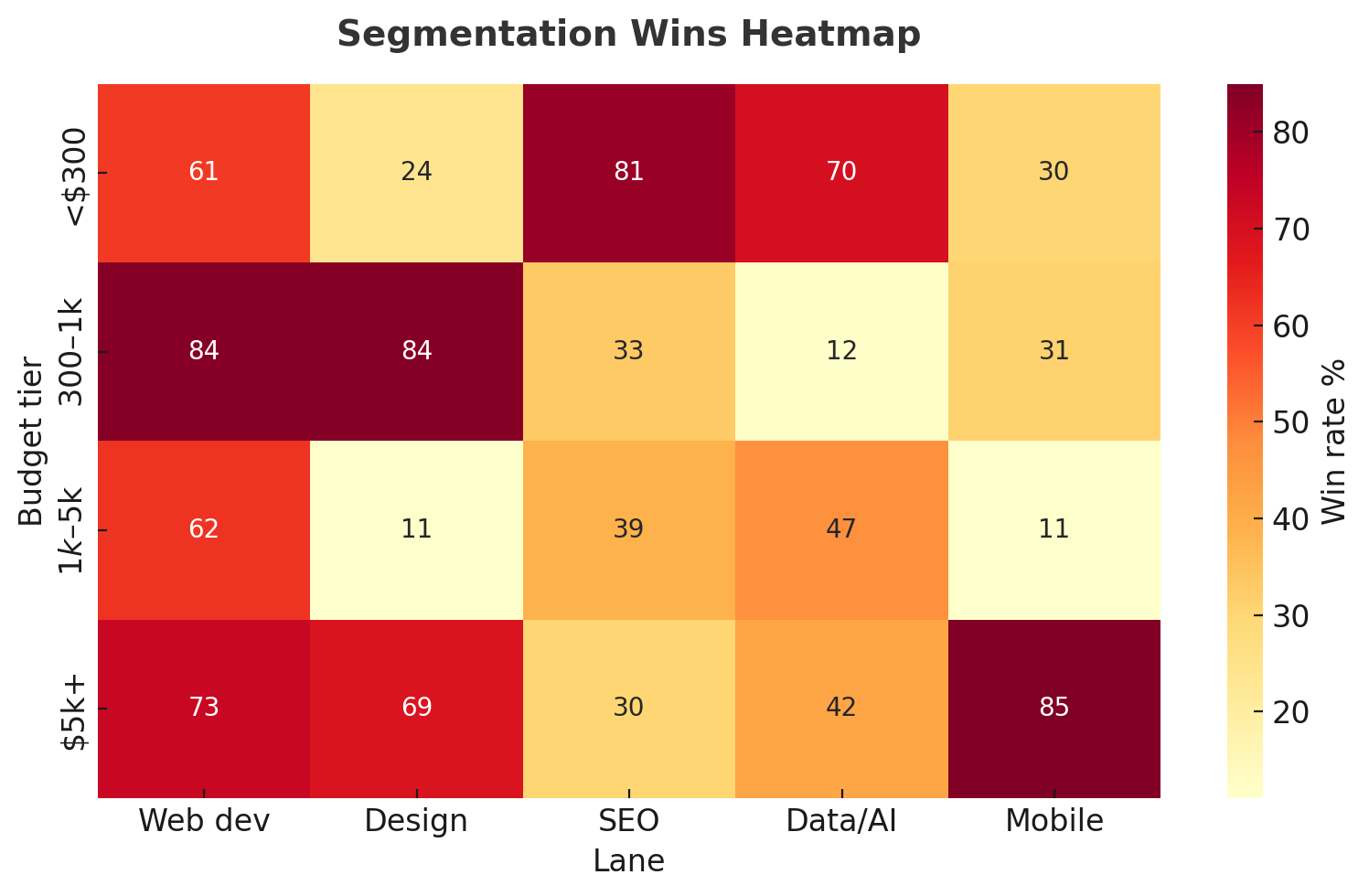

Segmentation: don’t average away the truth

Wins often hide inside lanes. Segment your proposal analytics by:

- Lane: web dev, design, SEO, data/AI, mobile

- Budget tier: <$300, $300–$1k, $1k–$5k, $5k+

- Post age: <60 minutes vs 60–240 minutes vs same day

- Proposals so far: “<5” vs “5–10” vs “10+”

- Client signal: payment verified + hires vs brand new

A variant that crushes in “React dashboards, <$1k, <60m” might do nothing in “B2B content, $1k+.”
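Segmenting is just grouping the tracker rows by one or more columns before computing the rate. Here is a minimal sketch with made-up rows; the row keys are hypothetical, and the tier boundaries (lower bound inclusive) are an assumption since the tiers above don't say which side the edges fall on.

```python
from collections import defaultdict


def budget_tier(budget_usd: float) -> str:
    """Map a job budget to a tier label. Lower-bound-inclusive edges are assumed."""
    if budget_usd < 300:
        return "<$300"
    if budget_usd < 1000:
        return "$300-$1k"
    if budget_usd < 5000:
        return "$1k-$5k"
    return "$5k+"


def reply_rate_by_segment(rows, keys=("lane", "variant")):
    """Return {segment: (replies, proposals, reply_rate)} per combination of keys."""
    counts = defaultdict(lambda: [0, 0])  # segment -> [replies, proposals]
    for row in rows:
        segment = tuple(row[k] for k in keys)
        counts[segment][0] += int(row["replied"])
        counts[segment][1] += 1
    return {seg: (r, n, r / n) for seg, (r, n) in counts.items()}


# Made-up rows for illustration only.
rows = [
    {"lane": "React dashboards", "variant": "A", "replied": False},
    {"lane": "React dashboards", "variant": "B", "replied": True},
    {"lane": "React dashboards", "variant": "B", "replied": True},
    {"lane": "B2B content", "variant": "A", "replied": True},
    {"lane": "B2B content", "variant": "B", "replied": False},
]
for segment, (replies, sent, rate) in sorted(reply_rate_by_segment(rows).items()):
    print(segment, f"{replies}/{sent} = {rate:.0%}")
```

Printing the raw counts next to each rate matters here: a segment with two or three sends will show dramatic percentages that mean very little.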

Stats without drama (the 5-minute check)

If you want a lightweight sanity check:

- Compute reply rate for A and B (e.g., A = 7/40 = 17.5%; B = 13/40 = 32.5%).

- If the difference is ≥10 percentage points and both have ≥20 sends, it’s usually a keeper.

- If close (e.g., 22% vs 26%), rerun with a new batch or test another variable.

You can use a two-proportion test if you like, but don’t let math delay momentum.
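If you do want the two-proportion test, it fits in a few lines of standard-library Python. This sketch uses the common pooled z-test with a two-sided p-value; the function name is hypothetical, and the inputs are the example counts from above.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both variants have a 0% or 100% rate; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


z, p = two_proportion_z_test(7, 40, 13, 40)
print(f"z = {z:.2f}, p = {p:.3f}")
```

For the 7/40 vs 13/40 example this gives a z of roughly 1.55 and a p-value of about 0.12, which would not clear a conventional 0.05 threshold despite the 15-point gap. That is a good reason to treat a win at this sample size as directional and confirm it in a follow-up batch.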

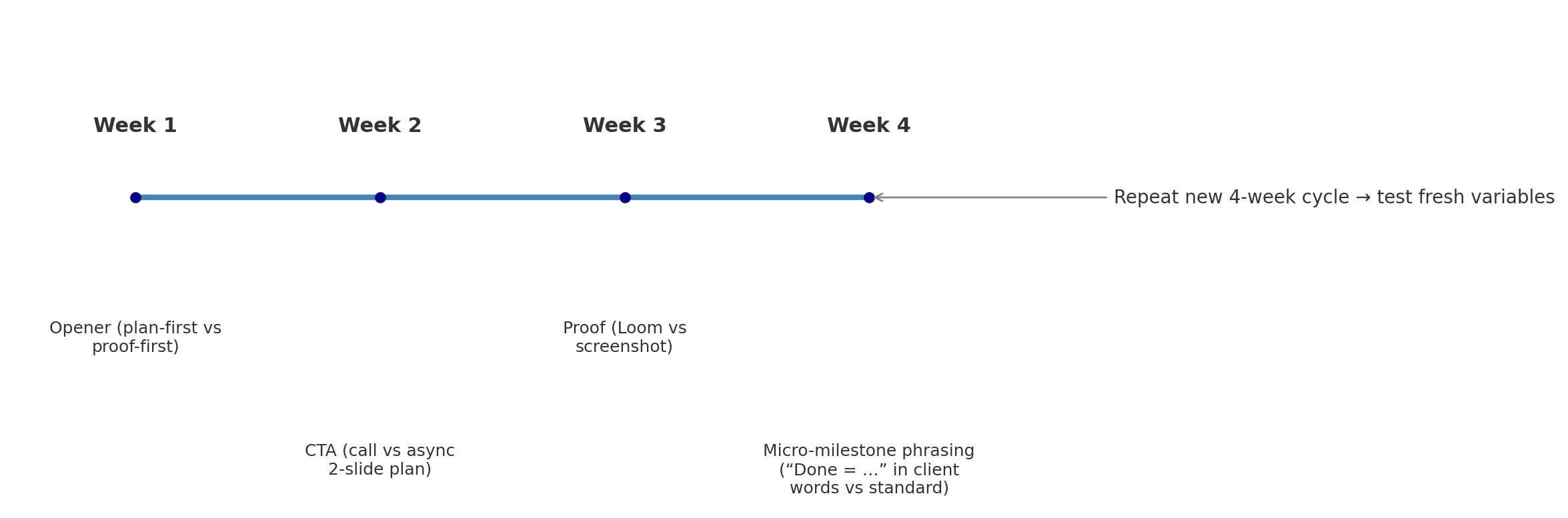

A 4-week testing calendar (repeatable)

- Week 1: Opener (plan-first vs proof-first)

- Week 2: CTA (call vs async 2-slide plan)

- Week 3: Proof (Loom vs screenshot)

- Week 4: Micro-milestone phrasing (“Done = …” in client words vs standard)

Keep what wins. Archive what loses. Start a new 4-week cycle with fresh variables.

Solo vs agency: how to scale testing

Solo operator

- Keep one lane focus and one test at a time.

- Use a short morning + afternoon bid sprint; log variants right after sending.

- Review on Friday; change one thing the following Monday.

Agency team

- Assign lane owners and standardize variant libraries (A/B snippets + proof artifacts).

- PM/VA runs triage and tags variants; closers customize and send.

- QA gate: two specifics from the post, micro-milestone with Done = …, one proof, one CTA.

- Weekly ops review: per-lane reply/interview/win rates; retire low performers.

This turns A/B testing Upwork proposals into a calm, continuous habit across the team.


Three example experiments (with likely outcomes)

- Plan-first vs Proof-first in Shopify CRO

  - Hypothesis: Proof-first wins (ecom buyers want results fast).

  - Result pattern: Reply +20–40% for proof-first when the artifact is a mobile LCP before/after.

  - Keep: Proof-first; follow with a tight micro-milestone.

- Binary CTA vs open CTA in B2B content

  - Hypothesis: Binary CTA (“Outline today or call?”) lifts replies.

  - Result pattern: +10–25% replies, especially when client is verified and post is <3 hours old.

  - Keep: Binary CTA.

- Loom vs screenshot in data/AI

  - Hypothesis: Short Loom builds trust better than a static chart.

  - Result pattern: Interviews rise 10–20%; wins unchanged until you add a clear “decision memo” promise.

  - Keep: Loom + promise a 1-page decision memo.

These are common wins—validate in your lanes.

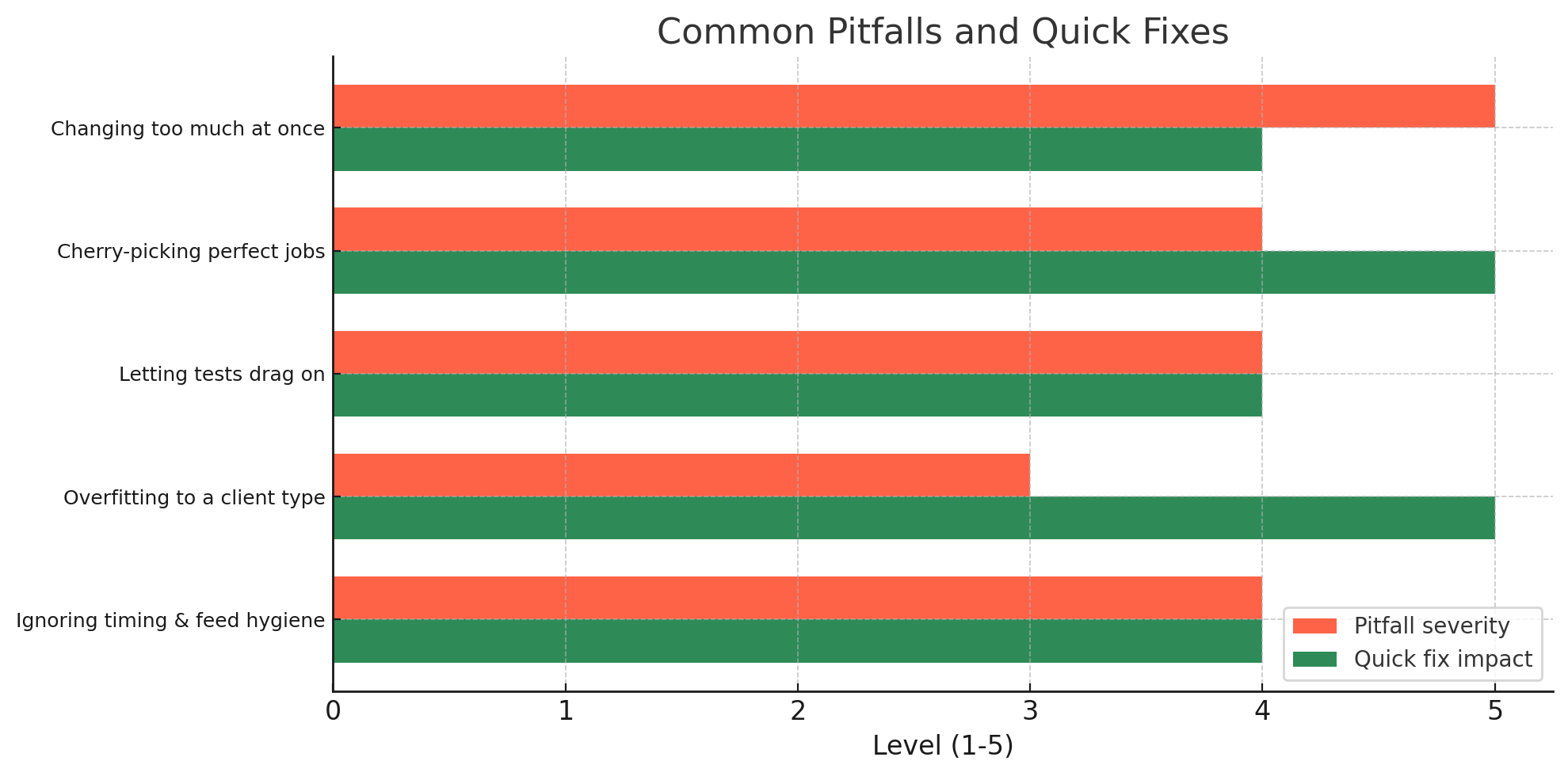

Common pitfalls (and quick fixes)

- Changing too much at once.

  Fix: Freeze everything but the variable; use snippets to keep discipline.

- Cherry-picking “perfect” jobs for your favorite variant.

  Fix: Stick to your randomization rule.

- Letting tests drag on forever.

  Fix: Timebox to two weeks or 30–50 sends per variant.

- Overfitting to a single client type.

  Fix: Segment, then confirm the win in the next cycle.

- Ignoring timing and feed hygiene.

  Fix: Clean saved searches; respond inside a sprint schedule.

Copy-and-paste assets

Variant tags

Keep it simple: write “A” or “B” in your tracker—not in the proposal text. Internally, name snippets like OPEN_A_plan_first / OPEN_B_proof_first.

Opener library (fill-in)

- Plan-first (A):

  “Two details stood out: {{specific_1}} and {{specific_2}}. I’d start with a 3-day milestone: Done = {{acceptance_criteria}} so we both know where ‘good’ ends.”

- Proof-first (B):

  “Shipped {{similar_result}} last month (60-sec Loom). For your {{goal}}, first step is {{micro_step}}—Done = {{acceptance_criteria}} this week.”

CTA library

- Call vs async: “Prefer a 10-minute call, or I can send a 2-slide plan today—your pick.”

- Binary choice: “Do you prefer Option A (faster, narrower) or Option B (deeper, more flexible)?”

Micro-milestone phrasing

- “Done = {{metric target}} on {{pages/flows}} with a Loom walkthrough and rollback plan.”

- “Done = {{deliverable}} approved and {{N}} tasks validated in {{tool}}.”
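If you store these snippets as text, a tiny helper can fill the `{{placeholders}}` and refuse to produce a proposal with anything left blank. This is a sketch under assumptions: the `render` function and the sample values are hypothetical, and the snippet name follows the OPEN_A_plan_first convention from above.

```python
import re

# Matches {{name}}, allowing spaces or slashes inside the name.
PLACEHOLDER = re.compile(r"\{\{\s*(.+?)\s*\}\}")


def render(template: str, values: dict[str, str]) -> str:
    """Fill every {{placeholder}}; raise if any placeholder has no value."""
    missing = [name for name in PLACEHOLDER.findall(template) if name not in values]
    if missing:
        raise KeyError(f"unfilled placeholders: {missing}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


OPEN_A_plan_first = (
    "Two details stood out: {{specific_1}} and {{specific_2}}. "
    "I'd start with a 3-day milestone: Done = {{acceptance_criteria}}."
)

# Made-up values for illustration.
print(render(OPEN_A_plan_first, {
    "specific_1": "the mobile checkout drop-off",
    "specific_2": "the 2-week deadline",
    "acceptance_criteria": "checkout flow audit with 3 prioritized fixes",
}))
```

Failing loudly on a missing value is the design choice that matters: a proposal sent with a raw `{{specific_2}}` in it is worse than no proposal.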

A one-week starter plan

- Day 1: Set your tracker and choose your North Star metric.

- Day 2: Draft Variant A (plan-first) and Variant B (proof-first).

- Days 3–6: Run the test using odd/even randomization; cap sends; log outcomes.

- Day 7: Review proposal analytics; keep the winner; pick the next variable (CTA or proof format).

Repeat weekly. In a month you’ll have four cleaner plays and a calmer pipeline.

Final thoughts

To A/B test Upwork proposals well, you don’t need complex stats. You need discipline. Keep variables singular, randomize fairly, measure the basics, and decide quickly. Over a few cycles, your experiments will reveal a personal playbook for your lanes: fewer guesses, more replies, and a proposal system that gets better every week.
