Agencies live or die by three conversion points: Reply → Shortlist (Interview) → Win (Hire). A healthy baseline for focused agencies is reply 20–35%, shortlist 10–20%, and win 5–12%, measured over a rolling 60–90-day window and segmented by lane and budget tier. Use a clean tracker, send targeted proposals within the first hour with a tiny milestone (with Done = … acceptance criteria), and review metrics weekly. This article gives you practical upwork proposal benchmarks, a defensible upwork reply rate benchmark, a lane-aware upwork win rate benchmark, and the exact scripts, formulas, and review cadence to improve each.

Definitions (so your numbers actually mean something)

Before you compare numbers, standardize language and measurement windows:

- Reply rate: % of proposals that get any substantive client reply (not just auto-responses).

Formula: Replies ÷ Proposals sent.

- Shortlist (Interview) rate: % of proposals that lead to an interview request, shortlist, or detailed scoping thread.

Formula: Shortlists ÷ Proposals sent.

- Win rate: % of proposals that convert to a funded contract.

Formula: Wins ÷ Proposals sent.

- Proposal-to-contract time: days from first send to funded milestone.

- RPP (Revenue per Proposal): total revenue divided by proposals sent—your compounding metric.

- Speed-to-lead: minutes from job posting to your proposal submission.

- Post age at send: how old the job was when you proposed (e.g., “<60 min”).

- Proposals already submitted: the marketplace counter at send time (“<5”, “5–10”, “10+”).

Track all of these by lane (web dev, UI/UX, SEO/content, data/AI, mobile), budget tier, and sender (if you have a team). That’s how you turn raw numbers into decisions.
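The timing metrics above are plain timestamp arithmetic. The sketch below assumes you log the posting time, send time, and funding time as Python `datetime` values; the function names are hypothetical. Speed-to-lead and post age at send describe the same interval (posting to send), so one helper covers both. The article's competition buckets overlap at 10, so the sketch assumes exactly 10 counts as "5–10".

```python
from datetime import datetime

def speed_to_lead_minutes(posted_at: datetime, sent_at: datetime) -> float:
    """Minutes from job posting to proposal submission (also the post age at send)."""
    return (sent_at - posted_at).total_seconds() / 60

def proposal_to_contract_days(sent_at: datetime, funded_at: datetime) -> float:
    """Days from first send to the funded milestone."""
    return (funded_at - sent_at).total_seconds() / 86_400

def competition_bucket(proposals_already: int) -> str:
    """Map the marketplace counter at send time to "<5", "5–10", or "10+".

    Assumption: exactly 10 is placed in "5–10".
    """
    if proposals_already < 5:
        return "<5"
    if proposals_already <= 10:
        return "5–10"
    return "10+"

# Example: job posted at 09:00, proposal sent at 09:42 the same day.
posted = datetime(2024, 5, 6, 9, 0)
sent = datetime(2024, 5, 6, 9, 42)
print(speed_to_lead_minutes(posted, sent))  # 42.0
```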

Global upwork proposal benchmarks (agency-focused)

Treat these as pragmatic yardsticks, not gospel. They assume: targeted lanes, specific openers, one proof artifact, and proposals sent mostly within a few hours of posting.

Starting baseline (competent, focused agency):

- Reply: 20–35%

- Shortlist: 10–20%

- Win: 5–12%

- Proposal-to-contract time: 3–14 days

- RPP: Highly variable, but aim for $80–$250+ per proposal in mixed lanes within 60–90 days

Stretch targets (dialed-in ICP, strong artifacts, tight ops):

- Reply: 30–45%

- Shortlist: 18–30%

- Win: 10–20%

- Proposal-to-contract time: 2–7 days

- RPP: $250–$800+ per proposal in mid/high-ticket lanes

These ranges are the practical heart of upwork proposal benchmarks for agencies. If you’re far below them after 60 days with 50+ sends, your inputs (fit, speed, messaging) need work; if you’re above, you likely have room to raise price or tighten scope.
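To apply that "far below / above" reading consistently, here is a minimal sketch that encodes the baseline and stretch ranges listed above. Function names, percent units, and three interpretation choices are assumptions: "far below" is simplified to "below the low end of the baseline range", a value where baseline and stretch overlap counts as stretch, and "above" means every rate exceeds the top of its baseline range. RPP is left out because its range depends heavily on lane mix.

```python
# Ranges from the article, in percent: (low, high).
BASELINE = {"reply": (20, 35), "shortlist": (10, 20), "win": (5, 12)}
STRETCH = {"reply": (30, 45), "shortlist": (18, 30), "win": (10, 20)}
MIN_DAYS, MIN_SENDS = 60, 50  # judge only after 60 days with 50+ sends

def band(metric: str, value_pct: float) -> str:
    """Place one observed rate relative to the baseline and stretch ranges."""
    if value_pct < BASELINE[metric][0]:
        return "below baseline"
    stretch_low, stretch_high = STRETCH[metric]
    if value_pct < stretch_low:
        return "baseline"
    if value_pct <= stretch_high:
        return "stretch"  # where ranges overlap, resolve upward (assumption)
    return "above stretch"

def verdict(days: int, sends: int, rates_pct: dict[str, float]) -> str:
    """Turn observed reply/shortlist/win rates into the next step suggested above."""
    if days < MIN_DAYS or sends < MIN_SENDS:
        return "too early to judge"
    if any(rates_pct[m] < BASELINE[m][0] for m in BASELINE):
        return "work on inputs (fit, speed, messaging)"
    if all(rates_pct[m] > BASELINE[m][1] for m in BASELINE):
        return "room to raise price or tighten scope"
    return "within baseline"

rates = {"reply": 24.0, "shortlist": 9.0, "win": 4.5}
print({m: band(m, v) for m, v in rates.items()})
print(verdict(days=75, sends=90, rates_pct=rates))
```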

Case in point: How a digital marketing agency cut lead-response time by 90% with GigRadar on Upwork. Faster response is the first lever behind the reply benchmarks above.

By budget tier (how money changes the math)

Smaller budgets often reply more (volume), but close less (noise). Larger budgets reply less (busy buyers), but when they engage, they convert.

- <$500: Reply 25–40%, Shortlist 10–18%, Win 5–10%

Use case: quick fixes, audits, prototyping. Great for artifact creation, not margin.

- $500–$2k: Reply 22–35%, Shortlist 12–22%, Win 6–12%

Use case: “first-mile” packages; fast approvals if scope is crisp.

- $2k–$10k: Reply 18–28%, Shortlist 12–25%, Win 8–18%

Use case: best agency zone; micro-milestones help de-risk.

- >$10k: Reply 10–20%, Shortlist 12–24%, Win 10–20%

Use case: multi-milestone builds; expect longer threads and procurement.

Set goals per tier so you aren’t punishing a high-ticket lane for not behaving like small tasks.

By lane (category affects your upwork reply rate benchmark and wins)

Typical ranges when you’re sending targeted, well-structured proposals:

- Web development (Shopify/Next/React): Reply 20–35% | Shortlist 12–22% | Win 6–12%

- UI/UX & Product Design: Reply 25–40% | Shortlist 15–28% | Win 7–15%

- SEO & Content: Reply 20–35% | Shortlist 12–24% | Win 6–12%

- Data/AI & Analytics: Reply 15–30% | Shortlist 10–22% | Win 5–12%

- Mobile (iOS/Android): Reply 18–32% | Shortlist 12–22% | Win 6–12%

Your upwork reply rate benchmark should reflect your ICP and artifacts. A UI/UX team with punchy visuals often out-replies a data agency—but both can win at similar rates if their scopes are crisp.

How to calculate cleanly (and avoid self-sabotage)

- Use rolling windows. 30 days is noisy; 60–90 days with 50–150 proposals gives a far more reliable read.

- Exclude auto-withdrawn or accidental sends from the denominator.

- Define “reply” as a substantive message (clarifying questions, interview request, or scope talk).

- Attribute multi-sender proposals to the owner who wrote/approved the message.

- Segment before judging. Don’t average lanes or budget tiers; it hides wins and problems.
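The rules above (rolling window, exclusions, segmentation, minimum sample) translate into a filter-then-group pass. This sketch assumes a hypothetical `Proposal` record and computes reply rate per lane and budget tier. Applying the 50-send minimum to each segment is an assumption; the article states it for the overall sample. Tier boundaries follow the article's four tiers, with exactly $500 and $2,000 opening the next tier and exactly $10,000 staying in "$2k–$10k".

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Proposal:
    sent_on: date
    lane: str
    budget_usd: float
    replied: bool           # substantive reply only, not auto-responses
    excluded: bool = False  # auto-withdrawn or accidental send

def budget_tier(budget_usd: float) -> str:
    if budget_usd < 500:
        return "<$500"
    if budget_usd < 2_000:
        return "$500–$2k"
    if budget_usd <= 10_000:
        return "$2k–$10k"
    return ">$10k"

def reply_rate_by_segment(proposals, today: date, window_days: int = 90,
                          min_sends: int = 50) -> dict:
    """Reply rate per (lane, budget tier) over a rolling window ending today.

    Segments with fewer than min_sends proposals map to None: too few to judge.
    """
    start = today - timedelta(days=window_days)
    sends: dict = defaultdict(int)
    replies: dict = defaultdict(int)
    for p in proposals:
        if p.excluded or not (start < p.sent_on <= today):
            continue  # not a real send, or outside the window: keep out of the denominator
        key = (p.lane, budget_tier(p.budget_usd))
        sends[key] += 1
        replies[key] += int(p.replied)
    return {key: (replies[key] / n if n >= min_sends else None)
            for key, n in sends.items()}
```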

Formulas to paste into your sheet:

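- Reply rate = COUNTIF(Reply, "Y") / COUNTA(Sent)

- Shortlist rate = COUNTIF(Shortlist, "Y") / COUNTA(Sent)

- Win rate = COUNTIF(Win, "Y") / COUNTA(Sent)

- RPP = SUM(Revenue) / COUNTA(Sent)

Here Sent, Reply, Shortlist, Win, and Revenue stand for named ranges over the matching tracker columns (rename them to fit your sheet), with Y/N flags in the stage columns. Count the denominator from the send column, not from a status value: if a single Status column flips from "Sent" to "Replied", COUNTIF(Status, "Sent") shrinks every time a client answers and inflates every rate.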
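The same rates can be cross-checked outside the sheet. This sketch assumes one hypothetical `Row` per real send (exclusions already removed) carrying reply/shortlist/win flags and revenue. It also assumes a tracker convention the article does not state: each later stage is also flagged at the earlier ones, so a row that skips a stage is reported as a tagging error instead of being counted silently.

```python
from dataclasses import dataclass

@dataclass
class Row:
    replied: bool
    shortlisted: bool
    won: bool
    revenue: float = 0.0

def funnel_metrics(rows: list[Row]) -> dict[str, float]:
    """Reply, shortlist, and win rates plus RPP, all divided by proposals sent."""
    if not rows:
        raise ValueError("no proposals sent")
    for i, r in enumerate(rows):
        # Tagging convention (assumption): each stage implies the one before it.
        if (r.shortlisted and not r.replied) or (r.won and not r.shortlisted):
            raise ValueError(f"row {i}: stage flags skip a step")
    sent = len(rows)
    return {
        "reply_rate": sum(r.replied for r in rows) / sent,
        "shortlist_rate": sum(r.shortlisted for r in rows) / sent,
        "win_rate": sum(r.won for r in rows) / sent,
        "rpp": sum(r.revenue for r in rows) / sent,
    }

rows = [Row(True, True, True, 1200.0), Row(True, True, False), Row(True, False, False)]
rows += [Row(False, False, False) for _ in range(7)]
print(funnel_metrics(rows))
# {'reply_rate': 0.3, 'shortlist_rate': 0.2, 'win_rate': 0.1, 'rpp': 120.0}
```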

Inputs that move each metric (playbooks)

Lifting reply rate (get seen and answered)

- Speed matters: aim to submit within 60 minutes of posting for top-priority (P1) fits; schedule three “bid sprints” daily.

- Two specifics: reference two details from the post in the first two lines.

- Micro-milestone: propose a 3–5 day first slice with Done = … acceptance criteria in the client’s wording.

- One proof artifact: a 60–90 second Loom or a before/after screenshot. One, not ten.

- Choice-based CTA: “10-minute call or a 2-slide plan—your pick.”

This is the backbone of a credible upwork reply rate benchmark.

Lifting shortlist rate (create confidence fast)

- Answer unstated risks: add one sentence on guardrails (QA, rollback, privacy).

- Name the approver: ask who signs off weekly; it shows you think in processes.

- Clarify constraints: stack, access, brand, compliance—invite specifics.

- Rename milestones by outcome: “Fix Pack & Validation” beats “Week 1.”

Related guide: Compliance and security for Upwork agencies — simple ways to bake trust and data safety into every proposal.

Lifting win rate (from “interested” to “funded”)

- Options, not haggling: A) discovery slice, B) fixed first mile, C) hourly with a cap.

- Change-request line: offer a simple A/B/C path (swap scope, add a milestone, or switch to hourly with a cap).

- Evidence + acceptance: paste Done = … into the milestone text; attach proof on delivery.

- Follow-up with value: T+24h risk note; T+72h 2-slide plan or mini-mock—no “just checking in.”

Together, these habits push your upwork win rate benchmark into the stretch zone.
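The follow-up timing is simple date arithmetic. A minimal sketch, assuming T is the time of your last message to an interested client (the article does not pin T down) and that you record which touches you have already sent; the helper names are hypothetical.

```python
from datetime import datetime, timedelta

# Cadence from the playbook: a risk note at T+24h, then a 2-slide plan or mini-mock at T+72h.
FOLLOW_UPS = [
    (timedelta(hours=24), "risk note"),
    (timedelta(hours=72), "2-slide plan or mini-mock"),
]

def follow_up_schedule(t: datetime) -> list[tuple[datetime, str]]:
    """Due time and content for each value-adding follow-up after time T."""
    return [(t + delay, touch) for delay, touch in FOLLOW_UPS]

def due_now(t: datetime, now: datetime, sent: set[str]) -> list[str]:
    """Follow-ups that are due by `now` and not yet sent."""
    return [touch for when, touch in follow_up_schedule(t)
            if when <= now and touch not in sent]

t = datetime(2024, 5, 6, 10, 0)
for when, touch in follow_up_schedule(t):
    print(when.isoformat(), touch)
```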

Your minimal tracker (columns to copy)

- Date/time sent

- Job title & link

- Lane (Web Dev, UI/UX, SEO, Content, Data/AI, Mobile)

- Budget tier (<$500, $500–$2k, $2k–$10k, >$10k)

- Post age at send (min) / proposals already submitted (“<5”, “5–10”, “10+”)

- Variant tag (A/B) if you’re testing opener or CTA

- Micro-milestone included? (Y/N)

- Proof attached? (Loom/Screenshot/Spec/None)

- Reply? (Y/N) — timestamp

- Shortlist? (Y/N) — date

- Win? (Y/N) — amount & date

- RPP (auto)

- Notes (objections, red flags, why lost)

Review weekly. The clarity alone often improves performance.

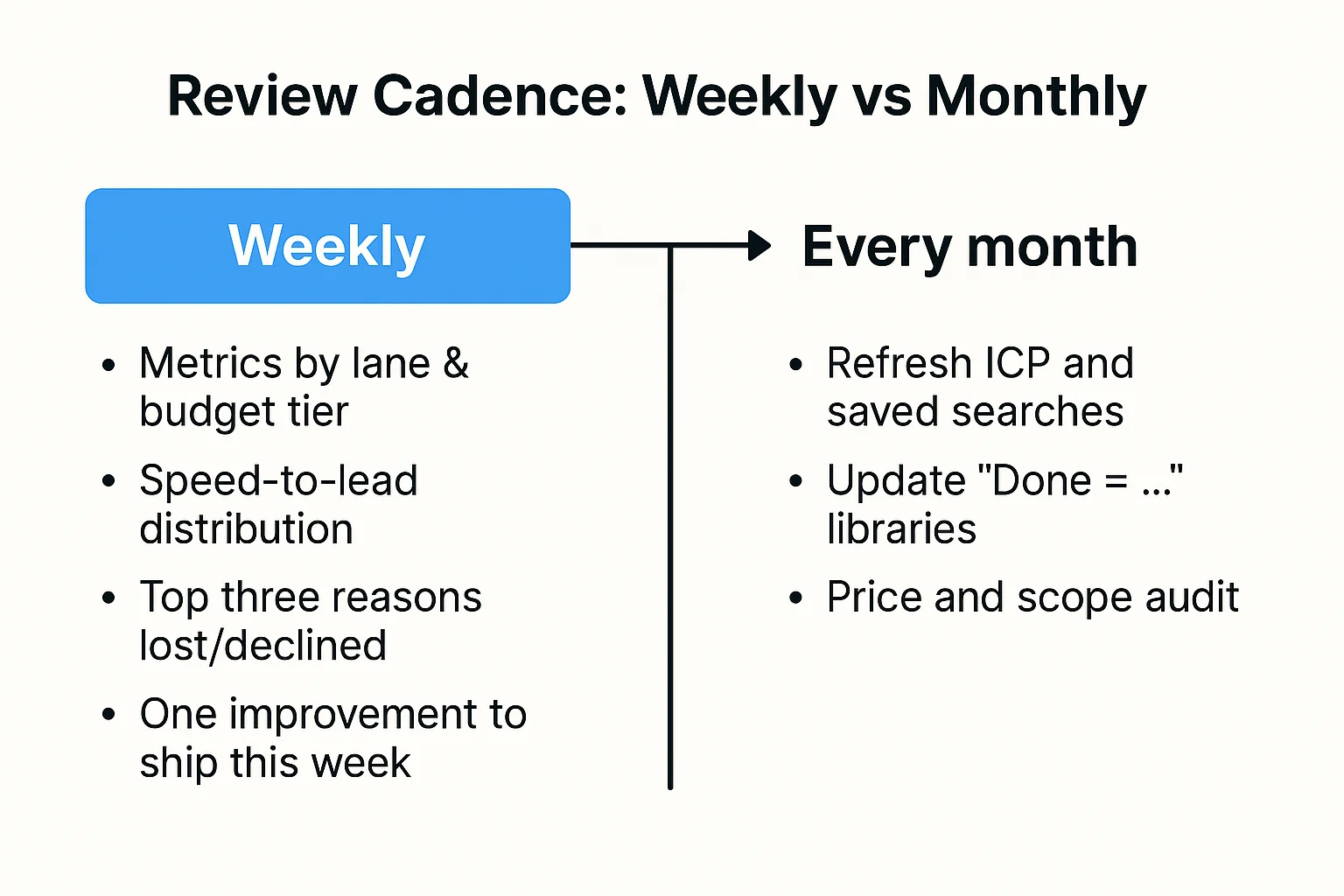

The review cadence (agencies need rhythm)

Weekly (30–45 minutes):

- Metrics by lane & budget tier: reply, shortlist, win, RPP.

- Speed-to-lead distribution (what % under 60 minutes?).

- Top three reasons lost/declined (budget, timing, scope, trust).

- One improvement to ship this week (e.g., new Loom proof, better negative keywords, tighter micro-milestone wording).
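For the speed-to-lead line of the weekly review, a small histogram plus the under-60 share is enough. This sketch takes one week's speed-to-lead values in minutes; the bucket edges (15, 30, 60, 180) are illustrative choices, not from the article.

```python
from bisect import bisect_right

EDGES = [15, 30, 60, 180]  # illustrative bucket edges, in minutes
LABELS = ["<15", "15–30", "30–60", "60–180", "180+"]

def speed_report(minutes: list[float]) -> dict[str, float]:
    """Share of a week's proposals per speed-to-lead bucket, plus the share under 60 minutes."""
    if not minutes:
        raise ValueError("no proposals sent this week")
    counts = [0] * len(LABELS)
    for m in minutes:
        counts[bisect_right(EDGES, m)] += 1  # lower edge inclusive: 60 lands in "60–180"
    report = {label: c / len(minutes) for label, c in zip(LABELS, counts)}
    report["under_60"] = sum(m < 60 for m in minutes) / len(minutes)
    return report

week = [12, 25, 41, 55, 70, 95, 210, 33]
print(f"{speed_report(week)['under_60']:.0%} of proposals sent under 60 minutes")
```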

Monthly (60 minutes):

- Refresh ICP and saved searches; cut a low-yield search.

- Update “Done = …” libraries and artifacts.

- Price and scope audit: can you move small wins into higher tiers?

Consistency beats heroics.

A/B testing (lightweight but real)

- Change one variable: opener style (plan-first vs proof-first), CTA (call vs 2-slide plan), or proof (Loom vs screenshot).

- Randomize simply: odd job index = A, even = B.

- Sample goal: 30–50 proposals per variant or 2 weeks, whichever comes first.

- Decide: keep the new variant only if it delivers a ≥20% lift on the primary metric without hurting wins.

This keeps your upwork proposal benchmarks improving instead of drifting.
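The assignment, stopping, and decision rules fit in a few lines. A minimal sketch, assuming A is your current version (control) and B the challenger, rates are passed as fractions, and "lift" means relative lift over A; the function names are hypothetical.

```python
def assign_variant(job_index: int) -> str:
    """Odd job index = A, even = B (simple alternation)."""
    return "A" if job_index % 2 == 1 else "B"

def ready_to_decide(sends_a: int, sends_b: int, days_elapsed: int,
                    target_per_variant: int = 30) -> bool:
    """Stop at the sample goal or after 14 days, whichever comes first."""
    return min(sends_a, sends_b) >= target_per_variant or days_elapsed >= 14

def keep_b(a: dict[str, float], b: dict[str, float],
           primary: str = "reply_rate", min_lift: float = 0.20) -> bool:
    """Keep challenger B only if it lifts the primary metric by min_lift
    (relative to A) and its win rate is no lower than A's."""
    if a[primary] > 0:
        lift = (b[primary] - a[primary]) / a[primary]
    else:
        # No baseline to compare against: any positive result counts as a lift.
        lift = float("inf") if b[primary] > 0 else 0.0
    return lift >= min_lift and b["win_rate"] >= a["win_rate"]

control = {"reply_rate": 0.25, "win_rate": 0.08}
challenger = {"reply_rate": 0.32, "win_rate": 0.08}
print(keep_b(control, challenger))
```

For scale: at 40 sends per variant and a 25% reply rate, a 20% lift is the difference between 10 and 12 replies.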

Example messages that lift each stage

Reply (proposal opener, written to earn the first reply):

Two details stood out: {{specific_1}} and {{specific_2}}. I’d start with a 3–5 day slice: Done = {{acceptance_criteria}} so we both know where “good” ends. Recent: {{result}} (60–90s Loom). Prefer a 10-minute call, or I can send a 2-slide plan today—your pick.

Shortlist (clarifier with confidence):

To make Milestone 1 exact: 1) access to {{repo/CMS/analytics}}? 2) who approves weekly? 3) any hard constraints (stack/brand/compliance)? I’ll tune Done = {{criteria}} around those.

Win (proposal handoff in Upwork):

Milestone 1 ({{days}}): {{deliverable}} — Done = {{criteria}} (${{amount}}). Evidence: {{artifact}} + validation steps. Change requests: swap scope, add milestone, or hourly with a cap—your choice.

30/60/90 goals (agency edition)

30 days (stabilize inputs):

- Build tracker; define lanes and budget tiers; set three daily bid sprints.

- Send most proposals within 60 minutes of posting for P1 fits.

- Baselines: Reply ≥20%, Shortlist ≥10%, Win ≥5%, RPP ≥$80.

60 days (systemize quality):

- Add a “Done = …” library and a fresh Loom/screenshot per lane.

- A/B test opener or CTA; enforce one-proof rule.

- Targets: Reply ≥25–30%, Shortlist ≥12–18%, Win ≥7–10%, RPP ≥$150.

90 days (optimize & scale):

- Tighten ICP (kill a weak sublane); raise first-mile pricing; standardize follow-ups.

- Targets: Reply ≥30–35%, Shortlist ≥18–25%, Win ≥10–15%, RPP ≥$250+.

Revisit these quarterly; reset as you move upmarket.

Diagnosing common patterns (and fixes)

- Replies low, everything else okay: You’re too slow or too generic. Fix saved searches, add negative keywords, and push the two-specifics + micro-milestone opener.

- Replies okay, shortlists weak: Scope still fuzzy. Add a crisp Done = …, ask who approves, and show one risk-mitigation line.

- Shortlists okay, wins weak: Price/packaging mismatch. Offer options (A/B/C) and paste acceptance criteria into the milestone text.

- Wins okay, RPP low: Raise first-mile pricing; aim for mid-tier budgets; trim lanes.

- Cycle time long: Speed up clarifiers and access; propose smaller first slices.
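The patterns above read as a decision list: find the first funnel stage that falls short, then check cycle time separately. A minimal sketch, assuming the starting-baseline floors as thresholds (reply 20%, shortlist 10%, win 5%, RPP $80) and the top of the 3–14 day proposal-to-contract range as the cycle-time limit; the `Metrics` type and finding codes are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Metrics:
    reply_pct: float
    shortlist_pct: float
    win_pct: float
    rpp_usd: float
    contract_days: float  # typical proposal-to-contract time

FIXES = {
    "replies_low": "Too slow or too generic: fix saved searches, add negative keywords, "
                   "use the two-specifics + micro-milestone opener.",
    "shortlists_weak": "Scope still fuzzy: add a crisp Done = ..., ask who approves, add a risk line.",
    "wins_weak": "Price/packaging mismatch: offer A/B/C options, paste acceptance criteria into the milestone.",
    "rpp_low": "Raise first-mile pricing, aim for mid-tier budgets, trim lanes.",
    "cycle_long": "Speed up clarifiers and access; propose smaller first slices.",
}

def diagnose(m: Metrics) -> list[str]:
    """Return finding codes: the earliest weak funnel stage, plus cycle time if too long."""
    findings = []
    # Report only the earliest weak stage (a design choice): fix it first, then re-measure.
    if m.reply_pct < 20:
        findings.append("replies_low")
    elif m.shortlist_pct < 10:
        findings.append("shortlists_weak")
    elif m.win_pct < 5:
        findings.append("wins_weak")
    elif m.rpp_usd < 80:
        findings.append("rpp_low")
    if m.contract_days > 14:
        findings.append("cycle_long")
    return findings

for code in diagnose(Metrics(26, 14, 3.5, 60, 18)):
    print(code, "->", FIXES[code])
```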

Copy-ready tracker skeleton (paste into Sheets/Notion)

- Sent (Date/Time)

- Lane | Budget tier | Country/Timezone

- Post age @ send | “Proposals already”

- Variant (A/B) | Proof type (Loom/Shot/Spec) | Micro-milestone (Y/N)

- Reply? (timestamp) | Shortlist? (date) | Win? (amount)

- Speed-to-lead (min) | Proposal-to-contract (days)

- RPP (auto) | Notes (why lost/won, objections)

Turn it into a weekly dashboard with sparklines for reply/shortlist/win.

Final thoughts

Benchmarks aren’t bragging rights—they’re feedback loops. Set lane-specific upwork proposal benchmarks, adopt a practical upwork reply rate benchmark based on your ICP and artifacts, and commit to a realistic upwork win rate benchmark that respects deal size and cycle time. Then run the system: clean inputs, fast targeted messages, crisp Done = … milestones, one proof, and a value-adding follow-up. Review weekly, improve one lever at a time, and let compounding do the rest.
