For agencies, the best results rarely come from choosing one camp in the Upwork AI bidder vs. human debate. AI is unbeatable for speed: monitoring feeds, drafting first passes, and organizing follow-ups. Humans win on nuance: qualification, scoping, pricing, risk, and relationship building. Treat bidding as a pipeline. Let an AI/automation layer gather and draft, let a VA triage and prep, and let a senior “closer” personalize, price, and send. On most agency accounts, this hybrid outperforms both pure automated bidding on Upwork and human-only approaches.

First principles: what “AI” and “human” actually mean here

When people say “AI bidder,” they usually mean a workflow (often with a language model) that:

- Watches saved searches and alerts.

- Parses new posts for scope, stack, budget, and risks.

- Generates job briefs and first-draft proposals.

- Schedules reminders for follow-ups and handoffs.

A “human bidder” can be you (the principal), a trained account manager, or a virtual assistant. Agencies sometimes hire a bidding VA on Upwork to triage jobs, prep snippets, and submit proposals under supervision.

The question isn’t whether robots replace people. It’s how to design a system where machines handle the boring, repeatable steps and people make the judgment calls. That’s the real conversation behind AI vs. human proposals on Upwork.

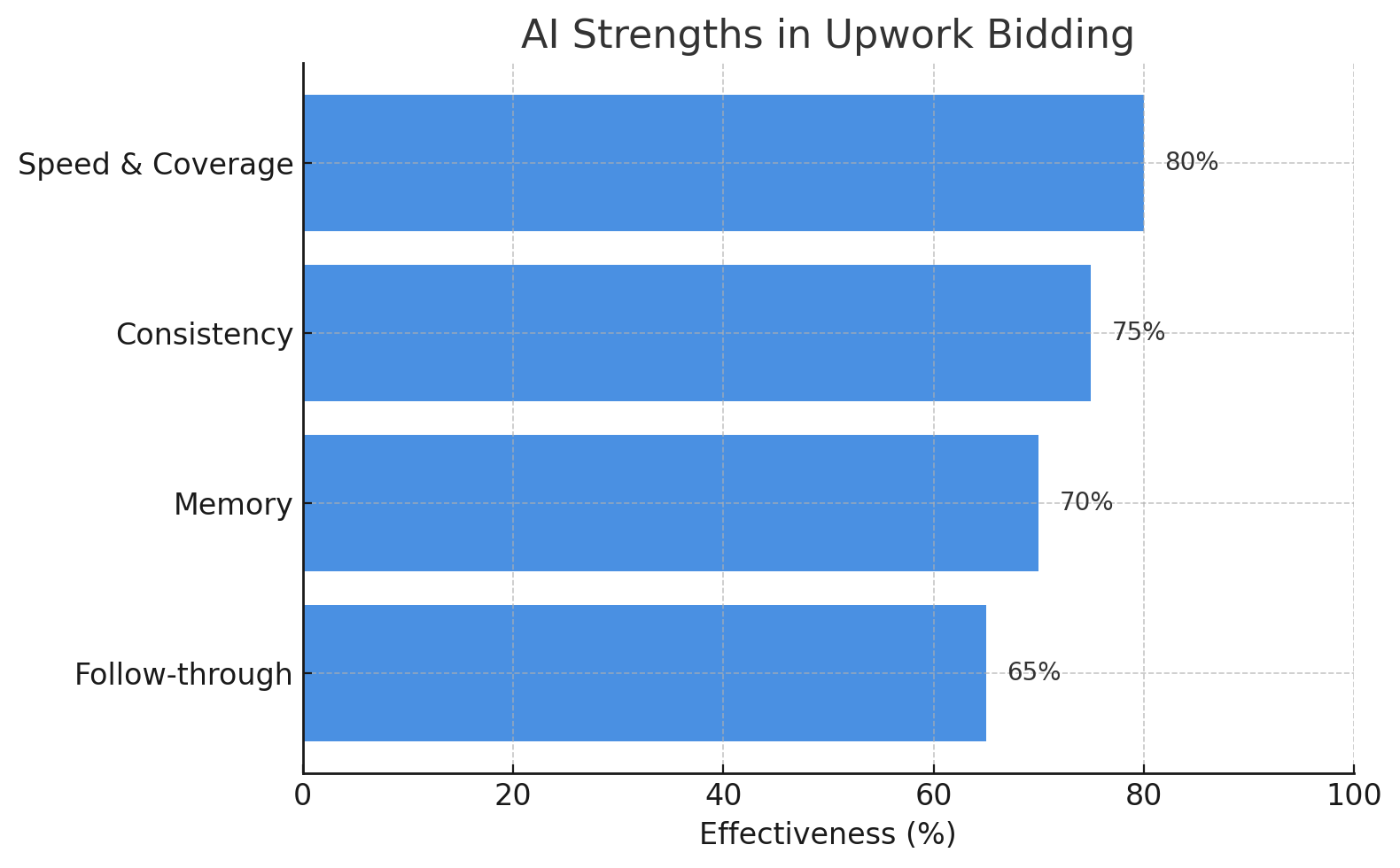

Where AI outshines people (and where it doesn’t)

AI strengths

- Speed & coverage: Scan the Upwork job feed in minutes, not hours.

- Consistency: Every draft follows your structure and tone guide.

- Memory: Keeps a library of case snippets, metrics, and assets to slot into templates.

- Follow-through: Never “forgets” to nudge a promising lead.

AI limits

- Context leaps: Catching subtle scope clues, hidden risk, and the politics of stakeholder alignment.

- Scoping & pricing finesse: Turning fuzzy asks into firm milestones and fair budgets.

- Credibility cues: Real-time rapport, reading a client’s tone, and handling objections.

- Platform nuance: Some posts require clarifying questions or portfolio curation that a human does better.

Bottom line: let AI earn you time; let humans earn you trust. That’s what getting the Upwork AI bidder vs. human balance right looks like.

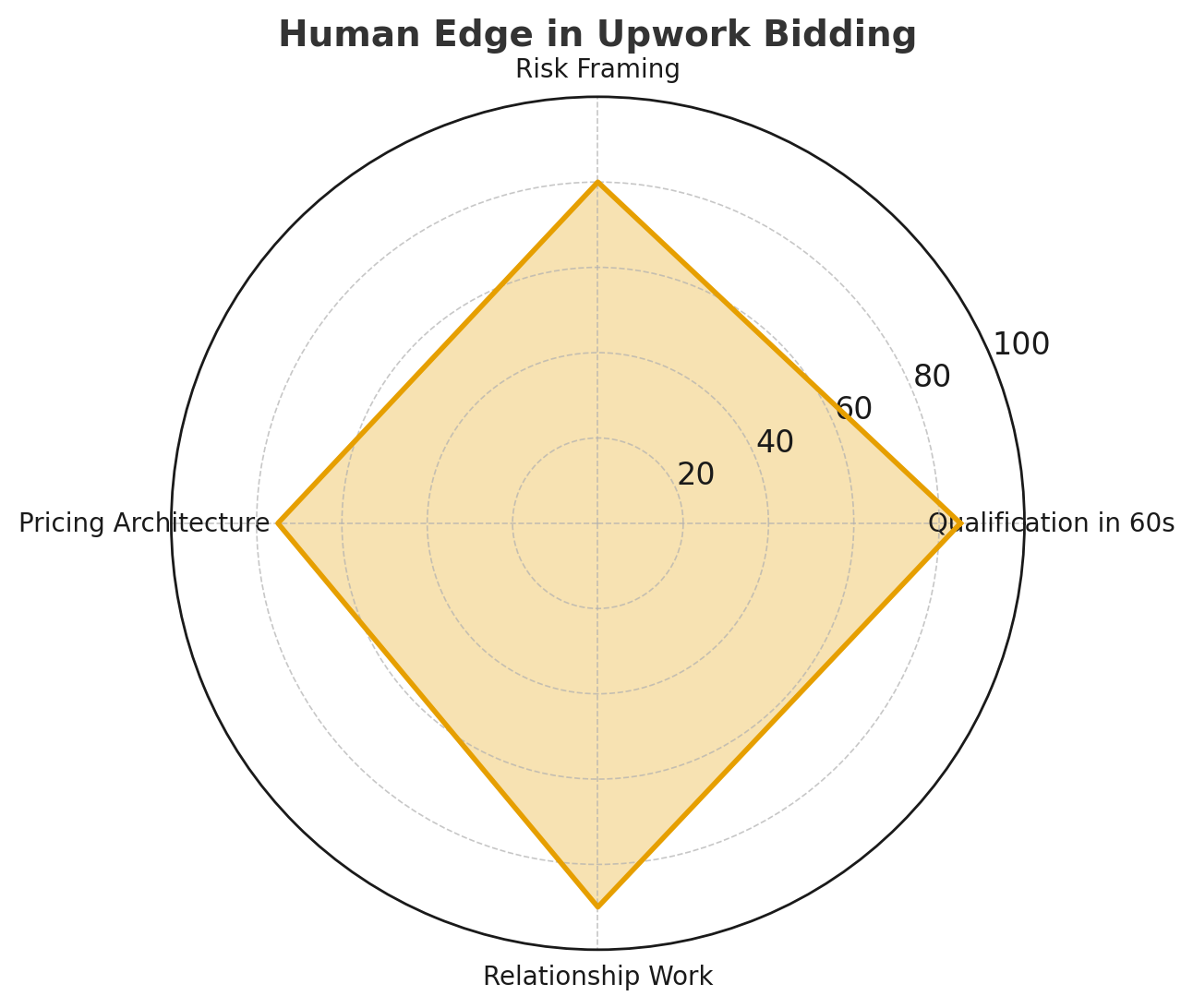

The human edge you shouldn’t automate

- Qualification in 60 seconds: Fit, scope clarity, budget reality, and client signals.

- Risk framing: “Here’s the fastest way to learn whether this will work, with a tiny paid milestone.”

- Pricing architecture: Option sets (MVP vs full), retainers, and success criteria.

- Relationship work: Calm, specific answers that signal reliability and seniority.

When agencies switch to fully automated bidding on Upwork without human oversight, reply quality drops and brand risk rises. Keep a person in the loop.

Want to see what this looks like in practice? Check out our case study of 5 bookkeeping & accounting agencies that boosted PVR by 120% and LRR by 150% while securing $2.5M on Upwork.

Cost models: AI, VA, principal—what’s the real cost per proposal?

You don’t need exact platform numbers to reason about cost. Think in components:

- Connects + boosts: Your hard spend to compete for attention.

- Writing time: Internal cost of the person touching the draft.

- Tooling: Any AI or workflow tools you pay for.

AI-first model:

- Low writing time per draft, but you pay for tooling.

- Great for volume and for lanes with clear templates and repeatable proof.

VA-assisted model (you hire a bidding VA on Upwork):

- Moderate time per draft; more consistent than founder-only bidding; upfront training cost.

- Excellent for triage, saved search upkeep, attaching correct samples, and scheduling follow-ups.

Principal-led model:

- Highest time per draft; best quality on complex, high-ticket scope; lowest volume.

- Ideal for enterprise-leaning posts, audits, and strategy retainers.

Most agencies land on a hybrid: AI + VA for intake and prep; principal or senior PM for final tailoring, pricing, and send. This reduces cost per qualified proposal while keeping win rates healthy.
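
To make the component view concrete, here is a minimal Python sketch that turns the three components into a per-proposal cost. It adds connects/boosts spend, the cost of human writing time, and tooling amortized over monthly volume. The `BiddingModel` class and every input number are hypothetical placeholders chosen for illustration. They are not Upwork pricing or benchmarks, so swap in your own figures.

```python
from dataclasses import dataclass


@dataclass
class BiddingModel:
    """Inputs for one bidding model. Every number used below is a placeholder."""
    name: str
    connects_cost: float      # connects + boosts spent per proposal, in dollars
    minutes_per_draft: float  # human minutes spent touching each draft
    hourly_rate: float        # internal hourly cost of whoever touches the draft
    tooling_monthly: float    # AI/workflow tool subscriptions per month
    proposals_monthly: int    # proposals sent per month under this model

    def cost_per_proposal(self) -> float:
        if self.proposals_monthly <= 0:
            raise ValueError("proposals_monthly must be positive")
        writing = self.minutes_per_draft / 60 * self.hourly_rate
        tooling = self.tooling_monthly / self.proposals_monthly  # amortize monthly tools
        return round(self.connects_cost + writing + tooling, 2)


# Hypothetical inputs for illustration only; replace with your own numbers.
MODELS = [
    BiddingModel("AI-first", 3.0, 5, 80.0, 100.0, 200),
    BiddingModel("VA-assisted", 3.0, 20, 10.0, 0.0, 120),
    BiddingModel("Principal-led", 3.0, 45, 150.0, 0.0, 30),
    BiddingModel("Hybrid", 3.0, 12, 60.0, 100.0, 150),
]

if __name__ == "__main__":
    for m in MODELS:
        print(f"{m.name}: ${m.cost_per_proposal():.2f} per proposal")
```

With these made-up inputs, the principal-led model costs far more per proposal than the others. That is the reason to spend senior time only on the tailoring and pricing step.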

Curious how to track whether your proposals actually pay off? Dive into our guide on Upwork proposal analytics to measure win rate, response rate, and real ROI.

What to automate (responsibly) and what to keep human

Automate (AI/Rules):

- Feed monitoring and deduping across your Upwork saved searches.

- Job brief extraction: deliverables, stack, constraints, timeline hints, acceptance criteria candidates.

- Drafting the first pass: a 180–220-word proposal with placeholders for two job-specific details in the opener, a tiny plan, one proof, availability, and a CTA.

- Portfolio matching: suggest the two best case snippets based on skills/industry tags.

- Follow-ups: schedule T+24h and T+72h nudges that add value (risk note, tiny roadmap).
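
Portfolio matching can start as a ranking of your case snippets by tag overlap with the job. This sketch assumes you tag each snippet and each job brief with skills/industry keywords. `CaseSnippet`, `match_snippets`, and the sample library are hypothetical names and data.

```python
from dataclasses import dataclass, field


@dataclass
class CaseSnippet:
    title: str
    tags: set[str] = field(default_factory=set)  # skills/industry tags you assign


def match_snippets(job_tags: set[str], library: list[CaseSnippet], k: int = 2) -> list[CaseSnippet]:
    """Suggest up to k snippets, ranked by how many tags they share with the job.

    Snippets with no overlap are dropped so an off-lane sample is never suggested.
    Ties keep library order because Python's sort is stable (even with reverse=True).
    """
    scored = [(len(s.tags & job_tags), s) for s in library]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [snippet for _, snippet in relevant[:k]]


# Hypothetical library for illustration.
LIBRARY = [
    CaseSnippet("Shopify store migration", {"shopify", "ecommerce", "migration"}),
    CaseSnippet("QuickBooks cleanup for a CPA firm", {"quickbooks", "bookkeeping", "accounting"}),
    CaseSnippet("Xero month-end close automation", {"xero", "bookkeeping", "automation"}),
    CaseSnippet("WordPress theme rebuild", {"wordpress", "php"}),
]

if __name__ == "__main__":
    job = {"bookkeeping", "quickbooks", "accounting", "reconciliation"}
    for snippet in match_snippets(job, LIBRARY):
        print(snippet.title)
```

Dropping zero-overlap snippets also supports the "retire old, off-lane work" habit. If nothing matches, the job may not be in your lane.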

Keep human:

- Go/No-Go calls on borderline jobs.

- Scoping & pricing (offer options, not a single number).

- Tone & details in the first two sentences and the micro-milestone.

- Ethics & platform compliance checks.

If you plan to hire a bidding VA on Upwork: a quick blueprint

- Scorecard: Must-haves (English clarity, process discipline, Upwork familiarity). Nice-to-haves (your stack/industry, light copy skills).

- SOP pack: 60-second triage, proposal skeleton, portfolio tagging rules, follow-up script, do/don’t list.

- Training loop: Shadow for one week, then “you draft—I review,” then “you draft & send within rules.”

- Quality gates: No send until two specifics are cited, a micro-milestone is present, and two matched samples are attached.

- Metrics: Responses, interviews, hires, and “proposal quality score” (a simple 1–5 rubric your senior reviews).
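
The quality gates translate directly into a pre-send check. This minimal sketch assumes a `Draft` record, a hypothetical structure that tracks four things:

- the cited specifics
- the micro-milestone
- the attached samples
- who approved the draft

The sign-off gate mirrors the guardrail that a person approves every send.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    specifics_cited: list[str] = field(default_factory=list)   # concrete details from the post
    micro_milestone: str = ""                                   # small paid first step
    samples_attached: list[str] = field(default_factory=list)  # matched portfolio items
    approved_by: str = ""                                       # senior who signed off


def gate_failures(d: Draft) -> list[str]:
    """Return every failed quality gate; an empty list means the draft may be sent."""
    failures = []
    if len(d.specifics_cited) < 2:
        failures.append("cite two specifics from the post")
    if not d.micro_milestone.strip():
        failures.append("add a micro-milestone")
    if len(d.samples_attached) != 2:
        failures.append("attach exactly two matched samples")
    if not d.approved_by.strip():
        failures.append("get human sign-off")
    return failures
```

Returning every failure, rather than stopping at the first one, gives the VA a complete fix list in a single review pass.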

This gives structure whether you rely more on people or on the machine layer.

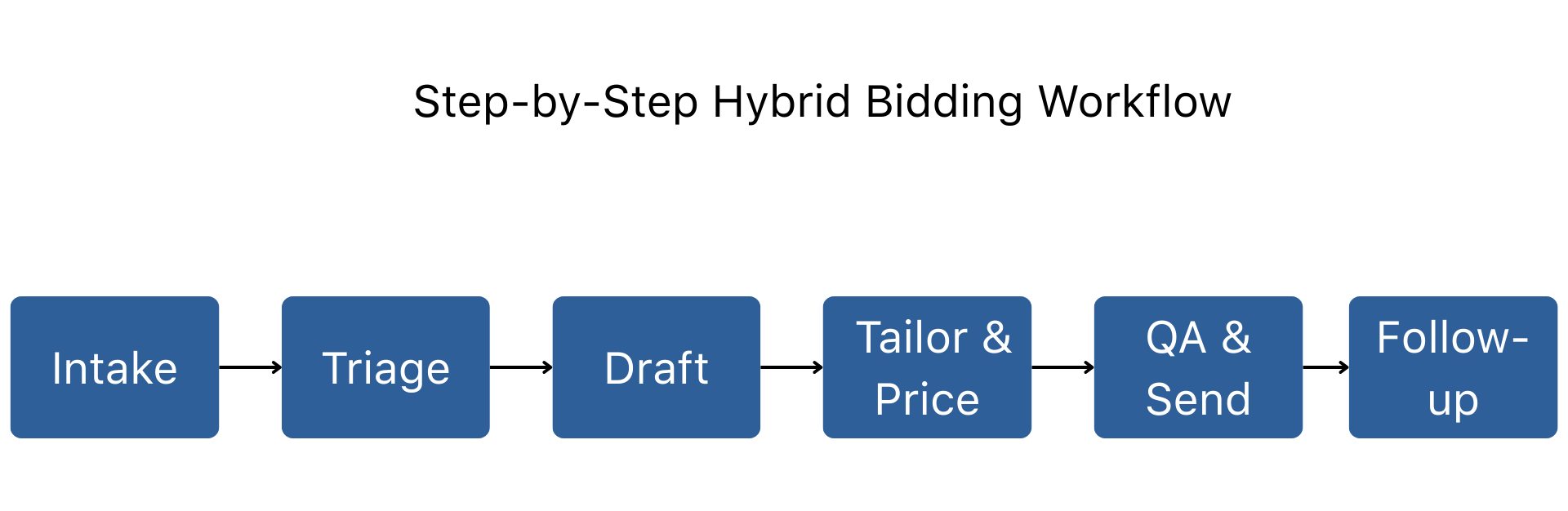

The hybrid pipeline (agency edition)

1) Intake (AI + filters).

Tight queries and negative keywords pull your lanes into a clean queue. AI summarizes each post as a 6–8 bullet brief.

2) Triage (VA/PM).

Apply a fast score (Fit, Scope clarity, Value, Client). P1 = now. P2 = later. Rest = archive.

3) Draft (AI).

Generate a proposal with your structure: two specifics, tiny plan, one proof, availability/overlap, CTA. Suggest two case snippets.

4) Tailor & price (Senior).

Rewrite the first two lines in the buyer’s language, choose the micro-milestone and acceptance criteria, set options/pricing.

5) QA & send (PM/VA).

Run the final checklist; attach exactly two matched samples; send; log.

6) Follow-up (AI-scheduled, human-approved).

T+24h: risk/mitigation note. T+72h: 2-slide roadmap offer. Keep it helpful, not pushy.
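
The follow-up step can be sketched as a scheduler that queues the T+24h and T+72h nudges with a pending-approval status, so nothing goes out until a human approves it. Three details are assumptions for illustration: the function name, the dictionary shape, and the rule that a client reply cancels automated nudges.

```python
from datetime import datetime, timedelta

# Offsets and content follow the pipeline; the task dict shape is a hypothetical choice.
FOLLOW_UPS = [
    (timedelta(hours=24), "risk/mitigation note"),
    (timedelta(hours=72), "2-slide roadmap offer"),
]


def schedule_follow_ups(sent_at: datetime, client_replied: bool = False) -> list[dict]:
    """Queue the T+24h and T+72h nudges, each waiting for human approval.

    Nothing is queued once the client has replied; a person owns the thread from there.
    """
    if client_replied:
        return []
    return [
        {"due": sent_at + offset, "content": content, "status": "awaiting_approval"}
        for offset, content in FOLLOW_UPS
    ]


if __name__ == "__main__":
    for task in schedule_follow_ups(datetime(2024, 5, 6, 9, 30)):
        print(task["due"].isoformat(), task["content"], task["status"])
```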

This resolves the false choice between AI and human proposals on Upwork by giving each layer the work it does best.
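
The fast triage score from step 2 fits in a few lines. The scale and cut-offs here are hypothetical and should be calibrated against your own lanes:

- Each criterion is scored 0 to 2.
- P1 needs 6 or more out of 8.
- P2 needs 4 or more.
- Zero fit is always archived.

```python
def triage(fit: int, scope_clarity: int, value: int, client: int) -> str:
    """Bucket a post as "P1" (now), "P2" (later), or "archive".

    Assumptions (not from any standard): each criterion is scored 0-2, a post with
    zero fit is archived outright, and the P1/P2 cut-offs are 6 and 4 out of 8.
    """
    scores = (fit, scope_clarity, value, client)
    if any(s not in (0, 1, 2) for s in scores):
        raise ValueError("score each criterion 0, 1, or 2")
    if fit == 0:
        return "archive"  # out of lane: skip regardless of the other scores
    total = sum(scores)
    if total >= 6:
        return "P1"
    if total >= 4:
        return "P2"
    return "archive"
```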

Guardrails for responsible automated bidding on Upwork

- Human-in-the-loop: A person approves every send.

- Specificity or skip: If you can’t reference two concrete details from the post, don’t apply.

- Micro-milestone policy: Offer a small, paid first step (audit/spec/prototype) with clear acceptance criteria.

- No deception: Don’t claim tools, teams, or results you don’t have; don’t impersonate.

- Quiet hours & pacing: Avoid bursty behavior that looks spammy; cap proposals/day per seat.

- Log everything: So you can learn what’s working—and stop what isn’t.

Automation should assist the seller, not impersonate them or flood clients.
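
The pacing guardrail is easy to enforce mechanically before any send. This sketch checks one seat's send history against three limits: quiet hours, a daily cap, and a minimum gap between proposals. The specific values (10 per day, 20 minutes, 22:00 to 07:00) are hypothetical defaults, not Upwork rules.

```python
from datetime import datetime, timedelta

# Hypothetical limits; tune them per seat and lane.
DAILY_CAP = 10
MIN_GAP = timedelta(minutes=20)
QUIET_START_HOUR, QUIET_END_HOUR = 22, 7  # no sends from 22:00 through 06:59 local time


def may_send(now: datetime, send_log: list[datetime]) -> tuple[bool, str]:
    """Check one seat's send log before releasing another proposal."""
    if now.hour >= QUIET_START_HOUR or now.hour < QUIET_END_HOUR:
        return False, "quiet hours"
    today = [t for t in send_log if t.date() == now.date()]
    if len(today) >= DAILY_CAP:
        return False, "daily cap reached"
    if today and now - max(today) < MIN_GAP:
        return False, "too soon after the last send"
    return True, "ok"
```

Returning a reason string alongside the boolean means the block can be logged, which feeds the "log everything" guardrail.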

Positioning your agency inside the debate

Clients aren’t anti-automation; they’re anti-generic. If asked, be open: “We use an AI prep layer to move fast, then a senior editor scopes and prices every proposal. You’ll always get a specific plan, a realistic first milestone, and two samples that match your stack.” That reassurance flips skepticism into confidence and differentiates you from indiscriminate automated bidding on Upwork.

What to test (without turning this into a science project)

Pick one metric per week and one variable to change:

- Response rate: Alter your opener—“proof-first” vs “plan-first.”

- Interview rate: Add acceptance criteria to the micro-milestone.

- Win rate: Present options (MVP vs full) instead of a single price.

- Revenue per proposal: Focus a week on your highest-margin lane only.

- Time to response: Prioritize posts that have fewer than 5 proposals and are under 60 minutes old.

Log the test tag on each proposal. Decide Friday: keep, tweak, or kill.
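
A tagged log makes the Friday decision quick. This sketch computes per-tag rates. It assumes a hypothetical schema in which each logged proposal is a dictionary with a `tag` plus boolean `responded`, `interviewed`, and `hired` fields.

```python
from collections import defaultdict


def summarize_by_tag(log: list[dict]) -> dict[str, dict[str, float]]:
    """Aggregate one week's proposal log by test tag.

    Each row is assumed (hypothetical schema) to look like:
    {"tag": "proof-first", "responded": True, "interviewed": False, "hired": False}
    Missing stage fields count as False.
    """
    counts = defaultdict(lambda: {"sent": 0, "responded": 0, "interviewed": 0, "hired": 0})
    for row in log:
        c = counts[row["tag"]]
        c["sent"] += 1
        for stage in ("responded", "interviewed", "hired"):
            c[stage] += int(bool(row.get(stage, False)))
    return {
        tag: {
            "sent": c["sent"],
            "response_rate": c["responded"] / c["sent"],
            "interview_rate": c["interviewed"] / c["sent"],
            "hire_rate": c["hired"] / c["sent"],
        }
        for tag, c in counts.items()
    }
```

With only a handful of proposals per variant, these rates are noisy. Treat them as input to the keep, tweak, or kill call rather than as a verdict.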

Sample proposal skeleton (hybrid-ready)

Subject: Practical plan for {{project_title}}—first milestone in {{timeframe}}

Opener (two specifics):

“Two details stood out: {{specific_1}} and {{specific_2}}. Here’s a straightforward way to de-risk delivery this week.”

Tiny plan (3 bullets):

- Confirm {{acceptance_criteria}} and deliverables in a quick kickoff.

- Build {{key_component}} using {{stack}} with {{testing_qa}}.

- Share v1 by {{date}}, review, iterate.

Proof:

“We recently shipped {{similar_result}} for a {{industry}} team using {{stack}} (artifact: {{link}}).”

Logistics:

Available {{overlap_hours}}. Happy to do a 10-minute call or send a 2-slide plan today.

It’s short enough for mobile, structured enough for AI, and credible enough when a human sharpens the details.
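
Because the skeleton uses `{{placeholder}}` slots, a small fill step can refuse to produce a draft while any slot, such as the two specifics, is still empty. This is a hypothetical helper built on Python's standard `re` module, not any particular proposal tool's API.

```python
import re

# Matches {{name}} placeholders, including names that contain spaces or slashes.
PLACEHOLDER = re.compile(r"\{\{\s*([^{}]+?)\s*\}\}")


def fill(template: str, values: dict[str, str]) -> str:
    """Fill every placeholder, refusing to return a draft with any slot left blank."""
    missing = sorted({name for name in PLACEHOLDER.findall(template)
                      if not values.get(name, "").strip()})
    if missing:
        raise ValueError("unfilled placeholders: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


OPENER = ("Two details stood out: {{specific_1}} and {{specific_2}}. "
          "Here's a straightforward way to de-risk delivery this week.")
```

Failing loudly here enforces the "specificity or skip" rule in code. An AI draft cannot reach review with a generic opener.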

Common failure modes (and simple fixes)

- Spray-and-pray drafts: Fix with a specificity rule and a daily cap.

- Weak micro-milestones: Add acceptance criteria (“Done = …”) and a 7–10 day window.

- Portfolio dumps: Limit to two matched samples; retire old, off-lane work.

- Over-automation: Require human sign-off; ban generic intros.

- No learning loop: Track proposals, responses, interviews, hires by lane; tune weekly.

These are small, boring habits—the kind that make pipelines predictable.

When a human-only approach still wins

- Enterprise-style RFPs and audits that demand deep scoping.

- Sensitive domains (regulated data, medical, finance) where trust and process matter most.

- Brand-critical creative where tone and references are half the sale.

- Negotiations involving multi-phase budgets, internal politics, and stakeholder wrangling.

For these, AI should prep, not speak. Your senior lead carries the room.

When to lean harder on AI (and maybe fewer people)

- High-volume micro-projects with a clear, repeatable template.

- Maintenance retainers where updates and small tasks recur weekly.

- Backlog cleanup when you have more P1 posts than humans can touch.

Even then, keep the human gate before send.

A 10-day rollout plan for agencies

Day 1–2: Document lanes, write the proposal skeleton, tag your top six case snippets.

Day 3: Wire up saved searches, alerts, and a triage board (New → P1/P2 → Draft → Review → Sent).

Day 4: Add the AI draft step; create a prompt with your voice rules, CTA, and micro-milestone examples.

Day 5: Create the sign-off checklist (two specifics, plan, proof, availability, two samples).

Day 6: Pilot with one seat (AI + senior review). Send 3–5 proposals.

Day 7: Review outcomes; refine prompt and checklist.

Day 8: Bring in a VA; train on triage and attachments; forbid sending without sign-off.

Day 9–10: Scale to two seats; set a weekly review for metrics and prompt updates.

In under two weeks you’ll have a calm, repeatable machine that blends speed with judgment.
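
The Day 3 board and the Day 8 rule can be encoded as allowed stage transitions plus a sign-off check on the move to Sent. The stage names follow the board described in the plan. Three details are assumptions for this sketch: the `Archived` stage, the `signed_off_by` field, and the Review-to-Draft bounce.

```python
# Allowed stage transitions; "Archived" stands in for "Rest = archive" at triage.
ALLOWED = {
    "New": {"P1", "P2", "Archived"},
    "P1": {"Draft", "Archived"},
    "P2": {"Draft", "Archived"},
    "Draft": {"Review"},
    "Review": {"Draft", "Sent"},  # the reviewer can bounce a draft back
    "Sent": set(),
    "Archived": set(),
}


def move(card: dict, to: str) -> dict:
    """Return a copy of the card in its new stage, enforcing the board rules."""
    current = card["stage"]
    if to not in ALLOWED[current]:
        raise ValueError(f"cannot move a card from {current} to {to}")
    if to == "Sent" and not card.get("signed_off_by"):
        raise PermissionError("no send without senior sign-off")
    return {**card, "stage": to}
```

Returning a new card instead of mutating the old one keeps a clean history if you log each move.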

Final thoughts

The “Upwork AI bidder vs. human” debate is a false binary. Agencies win by assigning the right work to the right layer: AI for speed and structure, humans for judgment and trust. Whether you hire a bidding VA on Upwork, keep everything in-house, or lean on an AI-heavy stack, anchor your process in specificity, a tiny paid first milestone, and two relevant proofs. Use automated bidding on Upwork to cover ground, but keep your finger on the quality dial. Do that, and your pipeline gets faster, calmer, and, most importantly, more predictable.
