Manual (human-first): You (or a teammate) read every post, qualify it, write a from-scratch message, and track outcomes in a sheet or CRM.

Automated (tool-assisted): Software gathers and filters jobs, drafts proposals from templates or AI, and nudges your pipeline. You still decide and send.

This isn’t either/or. The real question isn’t “robots or people”; it’s how to split Upwork bidding between automation and humans at each step of the funnel: discovery, qualification, drafting, and follow-up.

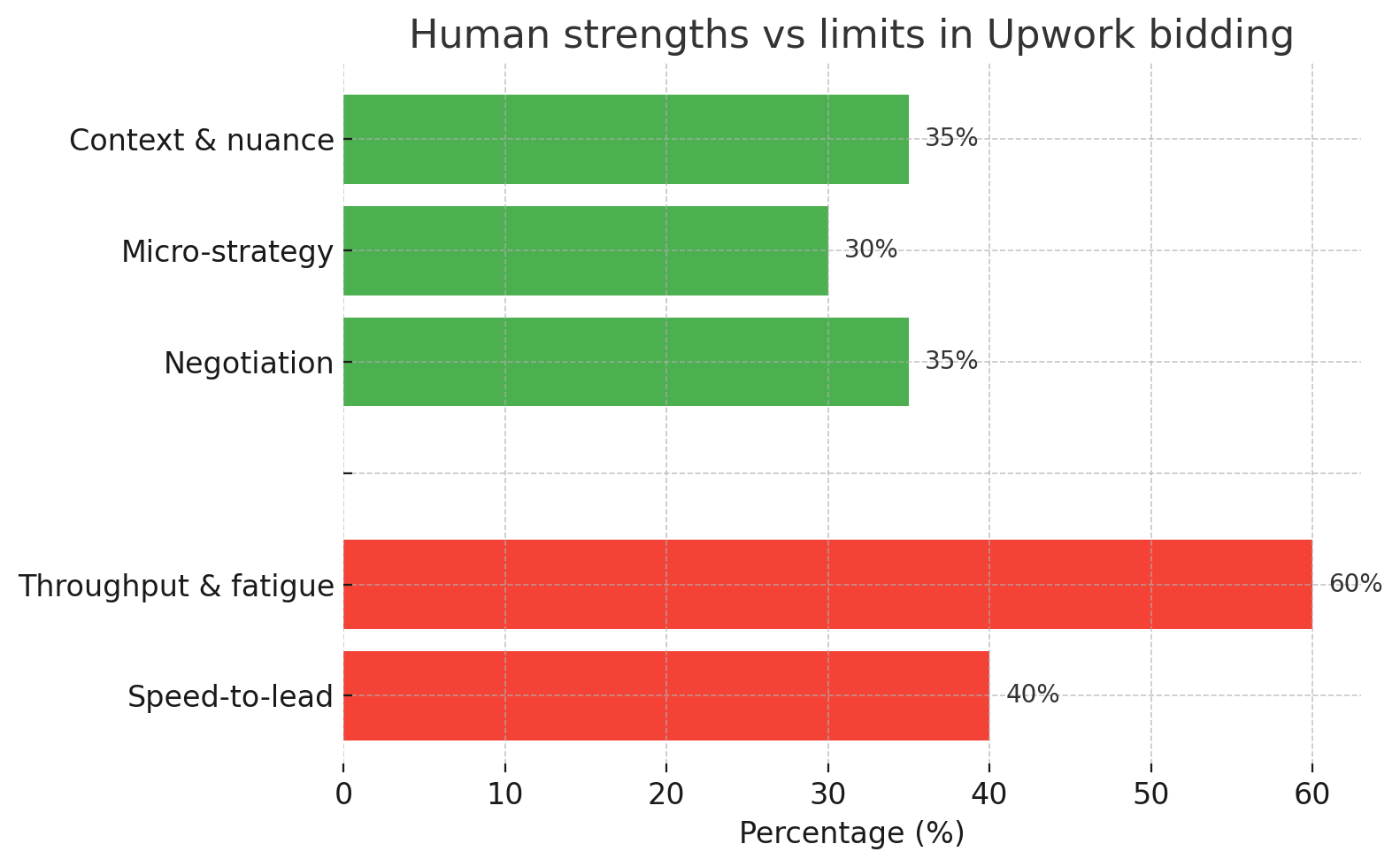

Where humans shine (and where they don’t)

Human strengths

- Context & nuance: Interpreting vague briefs, spotting red flags, and phrasing in the client’s voice.

- Micro-strategy: Choosing the right proof and carving a tiny “first mile” that reduces risk.

- Negotiation: Options framing (fixed vs hourly, swap vs add) and tone on calls.

Human limits

- Throughput & fatigue: Hard to keep quality steady across 10+ proposals/day.

- Speed-to-lead: Humans can’t watch feeds 24/7 or respond the instant a great post appears.

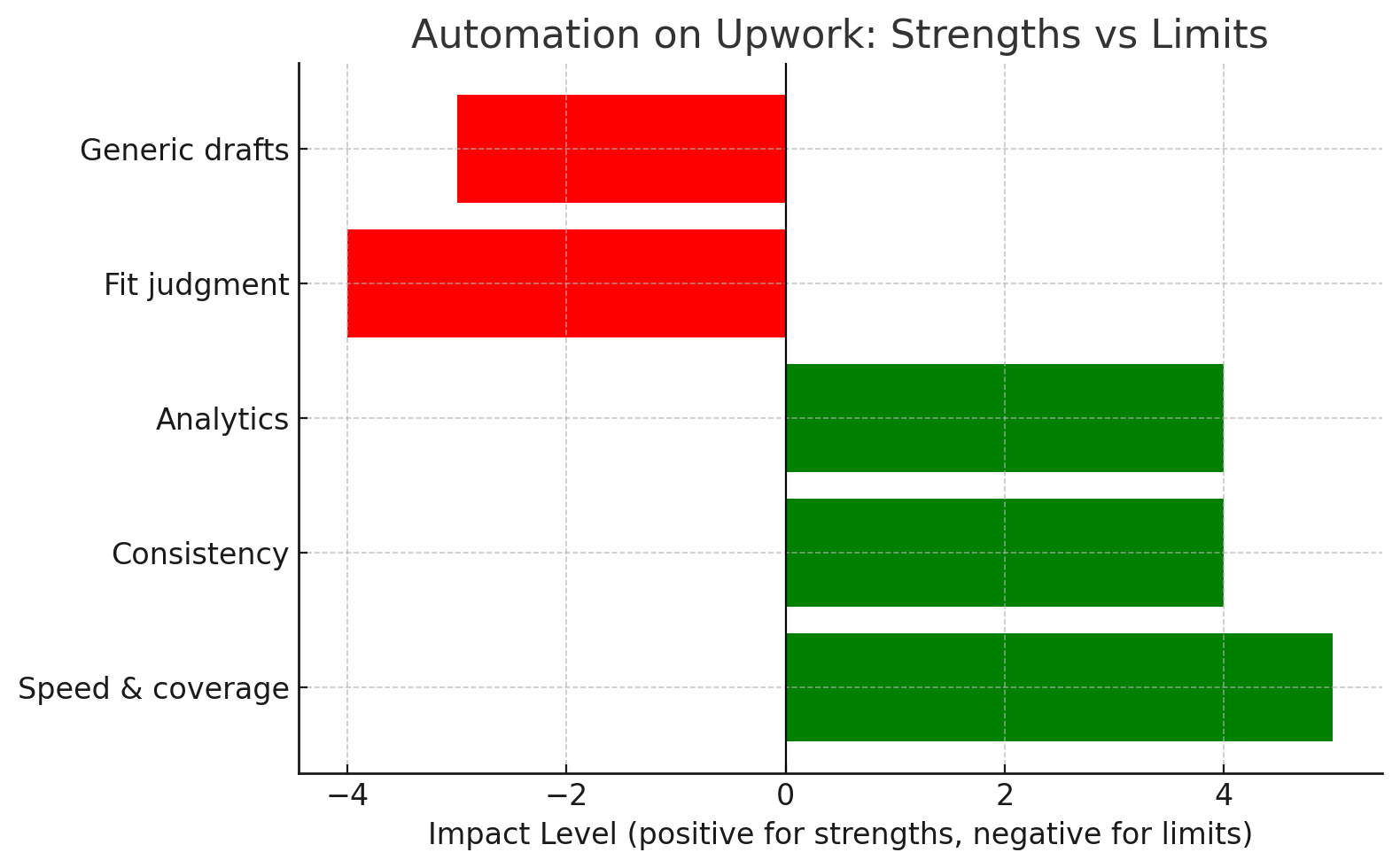

Where automation shines (and where it doesn’t)

Automation strengths

- Speed & coverage: Real-time alerts, deduped feeds, and P1/P2 priority routing.

- Consistency: Re-usable skeletons that keep the message tight and phone-friendly.

- Analytics: Clean metrics on reply, shortlist, win, and revenue per proposal.

Automation limits

- Fit judgment: Tools misread tone, scope traps, or budget tells.

- Generic drafts: Without curation, outputs sound samey and erode trust.

The winner across most lanes is human-in-the-loop. Think of tools as power steering, not autopilot.

If you want a real-world example of how this looks in action, check out how an AI-automation agency achieved an 8.6× ROI on Upwork with GigRadar.

Cost model: what you’re really paying for

Manual

- Time to find + read + qualify: 5–10 minutes/post if your feed is clean.

- Time to draft + attach proof: 10–20 minutes when starting from scratch.

- Follow-up touches: ~5 minutes per thread over a week.

Multiply by your effective hourly rate (or a VA rate) and you have a baseline cost per proposal.

Automated

- Tools: subscription(s) for alerts, AI drafts, and analytics.

- Setup & maintenance: creating templates, negative keywords, and proof vault.

- Human review: 3–7 minutes to qualify, personalize, and send (still required).

Bottom line: If automation doesn’t cut human minutes per quality proposal by at least one-third or raise reply/shortlist materially, it’s overhead.
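To make the one-third test concrete, here is a minimal sketch. The minute figures are midpoints of the ranges above. The $60 hourly rate, the $100/month tool spend, and the 100 proposals/month volume are illustrative assumptions, not figures from this article, and the function names are hypothetical.

```python
def cost_per_proposal(minutes: float, hourly_rate: float, tool_share: float = 0.0) -> float:
    """Human time cost plus this proposal's share of tool spend."""
    return minutes / 60 * hourly_rate + tool_share


def minutes_saved_fraction(manual_minutes: float, automated_minutes: float) -> float:
    """Fraction of human minutes that automation removes per proposal."""
    return (manual_minutes - automated_minutes) / manual_minutes


# Midpoints of the manual ranges: find/qualify 7.5 + draft 15 + follow-up 5.
MANUAL_MINUTES = 7.5 + 15 + 5
# Human review midpoint 5 + follow-up 5 (assumes follow-up stays manual).
AUTOMATED_MINUTES = 5 + 5

HOURLY_RATE = 60.0        # assumption: your effective hourly rate
TOOL_SHARE = 100.0 / 100  # assumption: $100/month spread over 100 proposals

manual_cpp = cost_per_proposal(MANUAL_MINUTES, HOURLY_RATE)
automated_cpp = cost_per_proposal(AUTOMATED_MINUTES, HOURLY_RATE, TOOL_SHARE)
saved = minutes_saved_fraction(MANUAL_MINUTES, AUTOMATED_MINUTES)

print(f"manual CPP:    ${manual_cpp:.2f}")
print(f"automated CPP: ${automated_cpp:.2f}")
print(f"minutes saved: {saved:.0%} (threshold: at least one-third)")
```

Swap in your own minutes, rate, and tool costs. If the saved fraction lands under one-third and reply/shortlist rates have not moved, the tooling is overhead by the rule above.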

Speed model: why timing matters (and when it doesn’t)

Fresh posts with “<5 proposals” behave differently: buyers shortlist quickly and stop reading when they feel safe. For time-sensitive lanes (bug fixes, migrations, performance), being in the first hour with a precise plan noticeably lifts replies. For consultative work (strategy, data, brand), speed helps less than clarity.

Practical rule: Route P1 fits to real-time and P2 fits to digest windows. That alone balances speed and quality and is the backbone of scaling Upwork proposals responsibly.
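One way to sketch that routing rule is below. The article does not define exactly what makes a post P1, so the test used here (time-sensitive lane, fewer than 5 proposals, posted within the last hour) is an assumption pieced together from the paragraph above. `JobPost`, `route`, and the lane names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JobPost:
    title: str
    lane: str                # e.g. "bug-fix", "strategy"
    proposals_so_far: int
    minutes_since_post: int


# Assumption: which lanes count as time-sensitive is your call.
TIME_SENSITIVE_LANES = {"bug-fix", "migration", "performance"}


def route(post: JobPost) -> str:
    """Return a queue name for a post that has already passed fit checks."""
    fresh = post.proposals_so_far < 5 and post.minutes_since_post <= 60
    if post.lane in TIME_SENSITIVE_LANES and fresh:
        return "P1-realtime"   # alert a human right away
    return "P2-digest"         # batch into the next digest window


print(route(JobPost("Fix checkout crash", "bug-fix", 2, 15)))
print(route(JobPost("Brand strategy refresh", "strategy", 2, 15)))
```

The function only decides when a human sees the post. Bid-or-skip stays a human call.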

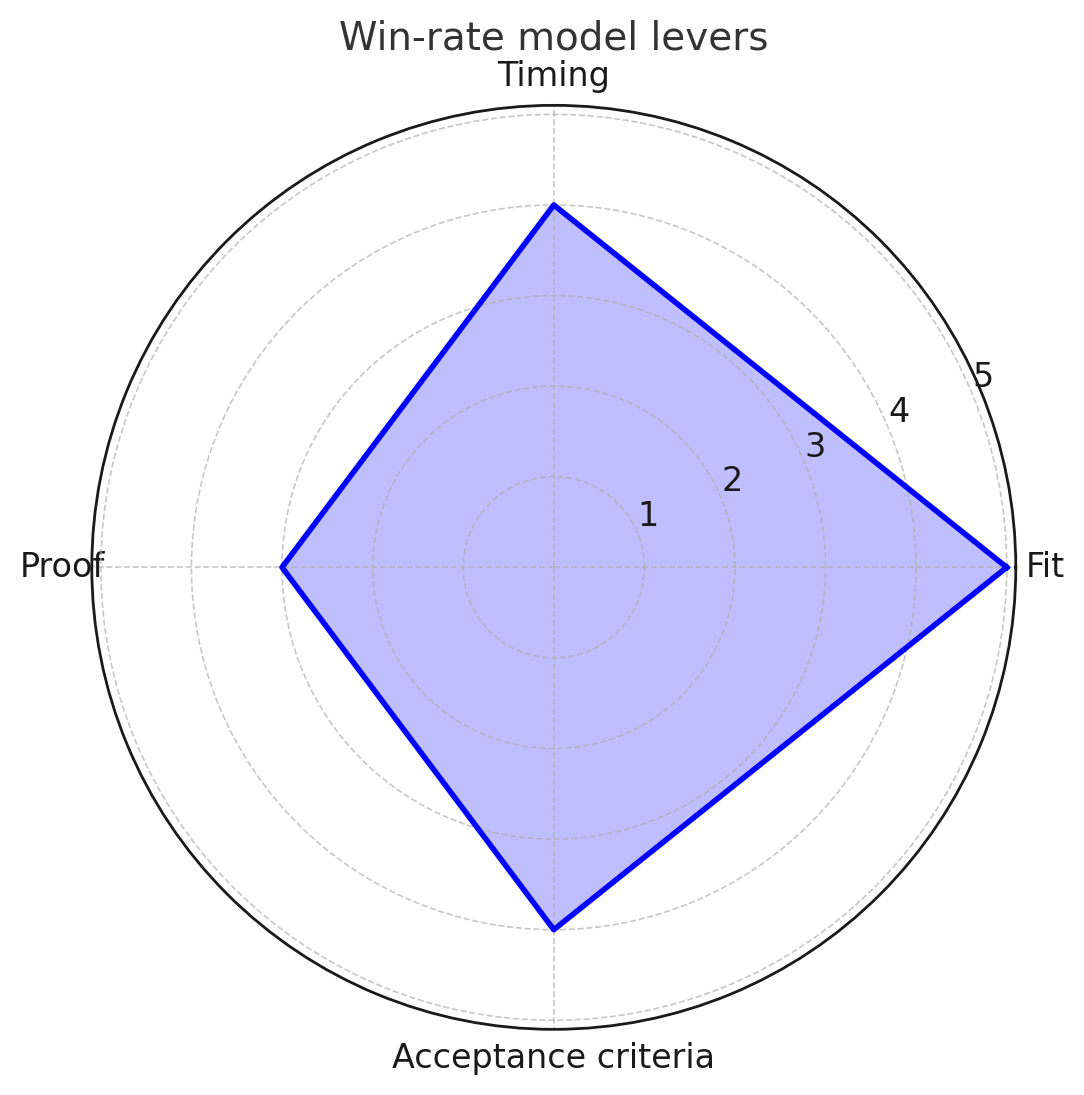

Win-rate model: the four levers that decide outcomes

- Fit: Lane + stack + budget + buyer signal.

- Timing: Minutes from post to send, and responsiveness after the buyer replies.

- Proof: One perfectly matched artifact (Loom or before/after).

- Acceptance criteria: A micro-milestone the buyer can approve quickly:

- Done = the client’s outcome in their words

- Evidence listed (PR link, screenshots, Loom, report)

- Duration (3–7 days for first mile)

Automation can help with levers 2–4 (timing, proof, acceptance criteria); humans must own lever 1 (fit).
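If you keep acceptance criteria in a library, a small structure like the one below can render them into milestone text. This is only a sketch: the `FirstMile` class and its wording are hypothetical, and the 3–7 day check simply mirrors the first-mile duration listed above.

```python
from dataclasses import dataclass


@dataclass
class FirstMile:
    done: str            # the client's outcome, in their words
    evidence: list       # e.g. ["PR link", "Loom"]
    duration_days: int   # 3-7 days for a first mile

    def milestone_text(self) -> str:
        """Render a one-line milestone the buyer can approve quickly."""
        if not 3 <= self.duration_days <= 7:
            raise ValueError("first mile should take 3-7 days")
        evidence = ", ".join(self.evidence)
        return (
            f"Done = {self.done}. "
            f"Evidence: {evidence}. "
            f"Duration: {self.duration_days} days."
        )


# Illustrative values only.
slice_ = FirstMile("checkout page loads in under 2s", ["PR link", "Loom"], 5)
print(slice_.milestone_text())
```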

VA vs AI bidding: who should do what?

Use a VA for

- Setting up and maintaining saved searches, negative keywords, and budget floors

- Pre-triaging posts against your ICP and rules of engagement

- Pasting the right skeleton and pulling the one best artifact

- Logging metrics and scheduling follow-ups

Use AI for

- Condensing the job post into goal/constraints/risks

- Drafting a phone-length opener (150–220 words) that includes two specifics, Done = …, and a binary CTA

- Suggesting the acceptance criteria phrased in buyer language

You (or a senior closer) decide

- Bid or skip

- The precise micro-milestone and price

- How to frame options (fixed first mile, discovery slice, or hourly cap)

- Live calls and negotiation

This division settles the VA-vs-AI question cleanly and keeps quality high.

Manual vs automated Upwork bidding by lane

Web dev (bug/perf/migrations):

- Verdict: hybrid. Automation for alerts; human for scope/risks and proof selection.

- Stack: strict P1 feeds, AI draft, human edit, fast send.

UI/UX & product design:

- Automation for discovery and draft; human for tone and tiny mock/plan.

- Follow-ups matter more than minute-one speed.

SEO & content:

- Automation for noise control and brief summaries; human for outline-first thinking and promise clarity.

- Track revenue per proposal (RPP); slow-but-right wins here.

Data/AI:

- Heavily human. Automation for structure and math, human for calibration/interpretability framing.

Mobile:

- Hybrid. Use automation for speed on stability fixes; human for TestFlight/Play Internal steps and acceptance criteria.

A simple ROI worksheet (copy this logic)

For each approach (manual, automated, hybrid), estimate:

- Cost per proposal (CPP): time × rate + tool share

- Reply rate (RR)

- Shortlist rate (SR)

- Win rate (WR)

- Average initial deal value (V)

Then compute:

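- EV per proposal = RR × SR × WR × V, with each rate measured against the previous stage (replies per proposal, shortlists per reply, wins per shortlist)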

- Net = EV − CPP

Run this side by side for manual, automated, and hybrid bidding. If automation doesn’t lift Net after 20–40 proposals, adjust inputs (filters, templates, proof) before adding more spend.
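The worksheet logic fits in a few lines of Python. This sketch treats each rate as conditional on the previous funnel stage, which is what multiplying them requires. Every number in the example rows is made up for illustration, and the `Approach` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Approach:
    name: str
    cpp: float             # cost per proposal: time x rate + tool share
    reply_rate: float      # replies per proposal sent
    shortlist_rate: float  # shortlists per reply
    win_rate: float        # wins per shortlist
    deal_value: float      # average initial deal value (V)

    def ev(self) -> float:
        """Expected value per proposal = RR x SR x WR x V."""
        return self.reply_rate * self.shortlist_rate * self.win_rate * self.deal_value

    def net(self) -> float:
        """Net = EV - CPP."""
        return self.ev() - self.cpp


# Illustrative inputs only; replace with your own 20-40 proposal sample.
approaches = [
    Approach("manual", cpp=27.5, reply_rate=0.20, shortlist_rate=0.50, win_rate=0.40, deal_value=1500),
    Approach("hybrid", cpp=11.0, reply_rate=0.25, shortlist_rate=0.50, win_rate=0.40, deal_value=1500),
]

for a in sorted(approaches, key=lambda a: a.net(), reverse=True):
    print(f"{a.name:<8} EV=${a.ev():.2f}  Net=${a.net():.2f}")
```

With samples this small the rates are noisy, so read the comparison as a direction rather than a precise figure.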

The hybrid SOP (the setup most agencies keep after testing)

- Saved searches tuned to ICP: include must-have skills and outcomes; add negatives (-homework -essay -unpaid -free).

- Routing: P1 to real-time; P2 to two daily digests.

- AI first draft: 150–220 words with two specifics, Done = …, one proof, and a choice-based CTA.

- Human edit: price, nuance, and acceptance criteria in buyer words.

- Follow-up sequence:

- T+24h value add (risk + mitigation)

- T+72h mini-asset (2-slide plan or 60–90s Loom)

- T+7d close-the-loop note

- Analytics: reply, shortlist, win, time-to-first-reply, revenue per proposal; keep only variants that beat baseline.
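The follow-up sequence in the SOP is easy to schedule mechanically. Here is a minimal sketch using Python's standard `datetime` module. The offsets come from the list above; the function name and the send time are hypothetical.

```python
from datetime import datetime, timedelta

# Offsets from the SOP: T+24h, T+72h, T+7d after the proposal is sent.
FOLLOW_UPS = [
    (timedelta(hours=24), "value add (risk + mitigation)"),
    (timedelta(hours=72), "mini-asset (2-slide plan or 60-90s Loom)"),
    (timedelta(days=7), "close-the-loop note"),
]


def follow_up_schedule(sent_at: datetime) -> list:
    """Return (due_time, touch) pairs for one proposal."""
    return [(sent_at + offset, touch) for offset, touch in FOLLOW_UPS]


# Illustrative send time.
for due, touch in follow_up_schedule(datetime(2024, 1, 1, 9, 0)):
    print(due.strftime("%Y-%m-%d %H:%M"), "-", touch)
```

A VA or a CRM reminder can own the schedule; a person still writes and sends each touch.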

That’s the automation-vs-human split in practice: divide the work where each excels.

Curious how the engine behind those results actually works?

Here’s a behind-the-scenes look at how GigRadar works — from real-time job discovery to analytics that guide every follow-up.

Templates that make automation worth it (plug-and-play)

Plan-first opener (universal)

Two details stood out: {{specific_1}} and {{specific_2}}. I’d start with a {{3–5}}-day slice so you see progress fast: Done = {{acceptance_criteria}}.

Recent: {{result}} for a {{industry}} project (60–90s Loom). I’m {{timezone}} with {{overlap}} overlap. Prefer a 10-minute call, or I can send a 2-slide plan today.

Proof-first (performance/design)

Shipped {{similar_result}} recently (short Loom). For your case, the first step is {{micro_step}} — Done = {{criteria}} this week. Call or 2-slide plan?

Follow-up (T+24h value add)

Quick note on {{their detail}}: safest win is {{micro_step}} — Done = {{criteria}}. I’ll include {{artifact}} so validation’s simple. Call or async plan?

Automation can fill the braces; a human locks the fit.
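Filling the braces can be sketched with a small regex helper. This is an illustration rather than any particular tool's behavior: `fill` is a hypothetical function, and it deliberately leaves unknown placeholders in place and reports them, so the human reviewer sees what still needs a decision.

```python
import re

# Matches {{name}} placeholders, including names with spaces like {{their detail}}.
PLACEHOLDER = re.compile(r"\{\{\s*(.+?)\s*\}\}")


def fill(template: str, values: dict) -> tuple:
    """Return (filled_text, missing_keys); unknown placeholders stay untouched."""
    missing = []

    def substitute(match):
        key = match.group(1)
        if key in values:
            return str(values[key])
        missing.append(key)
        return match.group(0)

    return PLACEHOLDER.sub(substitute, template), missing


template = "Quick note on {{their detail}}: safest win is {{micro_step}}. Done = {{criteria}}."
text, missing = fill(template, {
    "their detail": "the slow checkout",     # illustrative values
    "micro_step": "profiling the cart API",
})
print(text)
print("needs human input:", missing)
```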

Governance: policy-safe automation

Use tools to assist, not impersonate. Keep a person in the loop for every proposal and message, avoid spammy volume, and never bypass rate limits or share credentials with extensions you don’t trust. Responsible workflows protect your account and brand while you scale Upwork proposals.

A/B tests worth running (lightweight, high signal)

- Opener style: plan-first vs proof-first

- CTA: call vs 2-slide plan

- Asset type: Loom vs before/after screenshot

- Length: ~170 vs ~230 words

- Timing: <60 minutes vs 2–4 hours after posting (P1 lanes)

Keep the winner only if it lifts reply or shortlist by ~20% without hurting wins.
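The keep-or-drop rule can be written down as a check like the one below. It reads "~20%" as a relative lift over baseline and "without hurting wins" as a win rate that is not lower than baseline. Both readings are assumptions, and the function names and sample rates are hypothetical.

```python
def relative_lift(baseline: float, variant: float) -> float:
    """Relative change of the variant over the baseline, e.g. 0.25 for +25%."""
    return (variant - baseline) / baseline


def keep_variant(baseline: dict, variant: dict, min_lift: float = 0.20) -> bool:
    """Keep the variant if reply or shortlist lifts enough and wins do not drop."""
    lifted = any(
        relative_lift(baseline[metric], variant[metric]) >= min_lift
        for metric in ("reply_rate", "shortlist_rate")
    )
    wins_ok = variant["win_rate"] >= baseline["win_rate"]
    return lifted and wins_ok


# Illustrative rates only.
plan_first = {"reply_rate": 0.20, "shortlist_rate": 0.50, "win_rate": 0.40}
proof_first = {"reply_rate": 0.25, "shortlist_rate": 0.50, "win_rate": 0.40}
print(keep_variant(plan_first, proof_first))
```

On a few dozen proposals per arm, differences this size can still be noise, so rerun the check as more data comes in before retiring the baseline.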

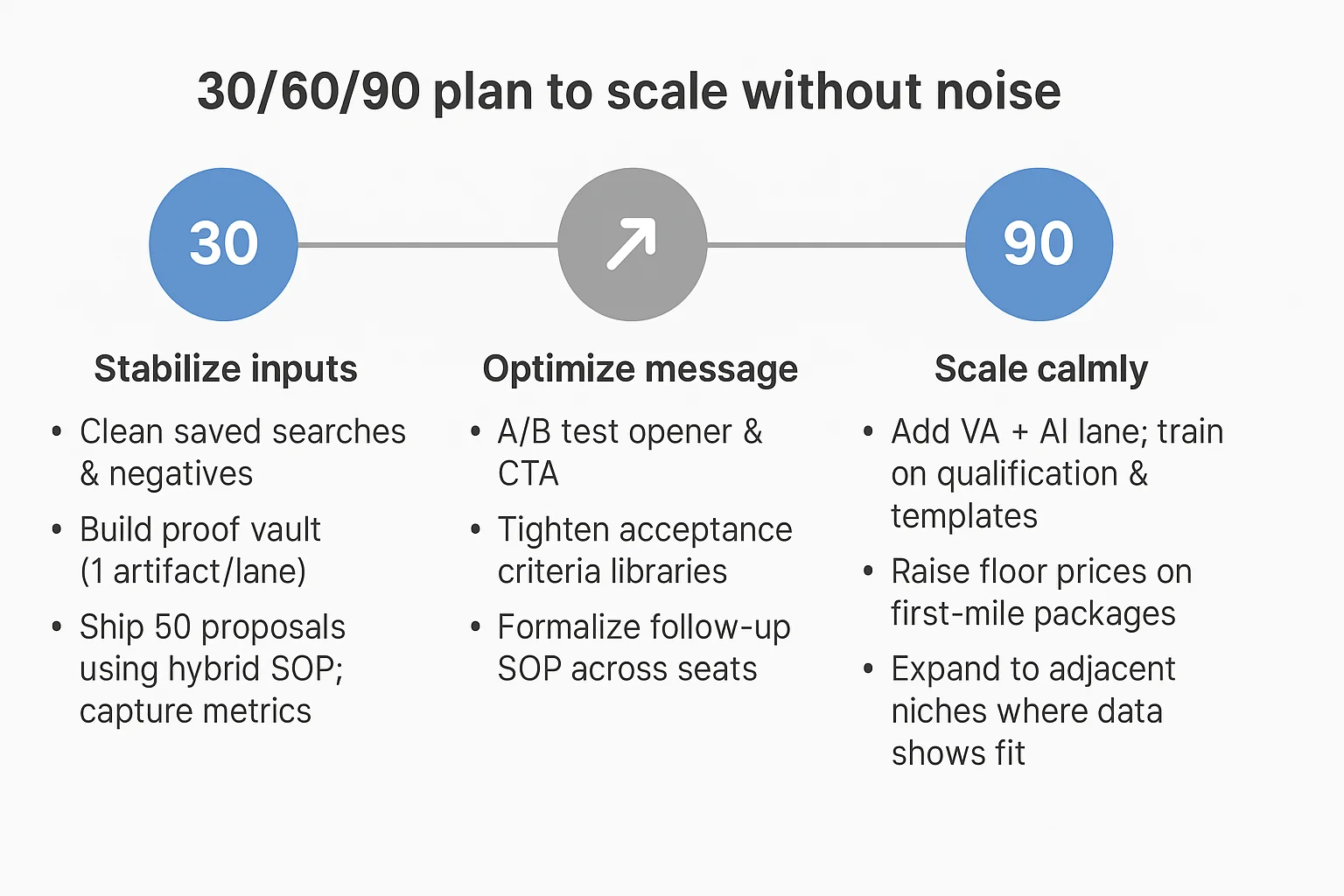

30/60/90 plan to scale without noise

30 days (stabilize inputs)

- Clean saved searches and negatives

- Build a proof vault (one artifact per lane)

- Ship 50 proposals using the hybrid SOP; capture metrics

60 days (optimize message)

- A/B test opener and CTA

- Tighten acceptance criteria libraries

- Formalize follow-up SOP across seats

90 days (scale calmly)

- Add a VA + AI lane; train on qualification and templates

- Raise floor prices on first-mile packages

- Expand to adjacent niches only where data shows fit

This is how you scale Upwork proposals sustainably without sacrificing quality.

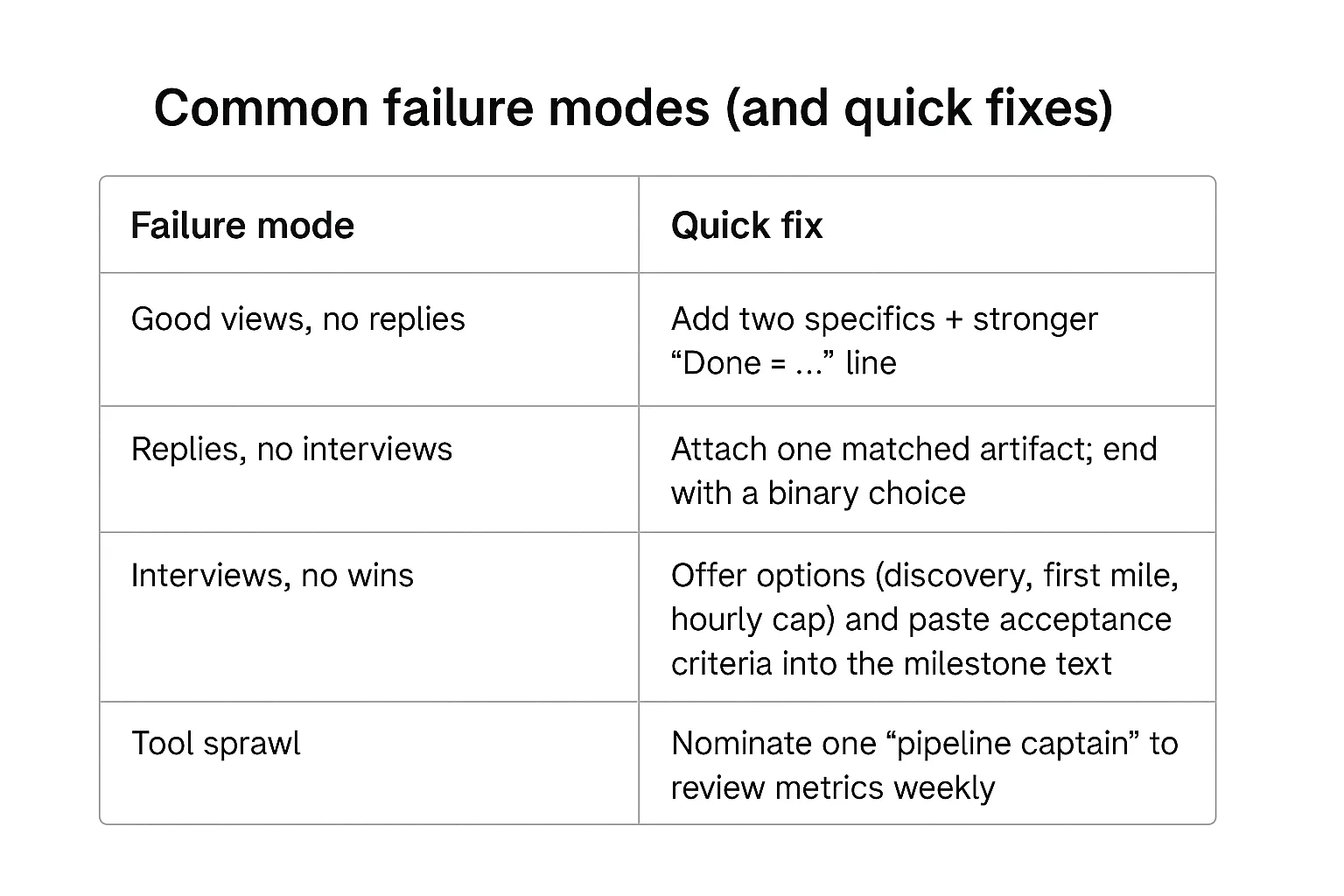

Common failure modes (and quick fixes)

- Good views, no replies: Drafts sound generic. Fix with two specifics + stronger Done = … line.

- Replies, no interviews: Your proof/CTA is weak. Attach one matched artifact; end with a binary choice.

- Interviews, no wins: Packaging unclear. Offer options (discovery, first mile, hourly cap) and paste acceptance criteria into the milestone text.

- Tool sprawl: Too many dashboards, no owner. Nominate one “pipeline captain” to review metrics weekly.

Final thoughts

The debate around manual vs automated Upwork bidding misses the point. Winning agencies don’t pick a side; they design a human-in-the-loop system where automation scouts and drafts, a VA enforces process, and a closer shapes value and tone. That’s how automation vs human becomes a collaboration, not a contest; how VA vs AI turns into clear roles; and how scaling proposals stops meaning “more noise” and starts meaning “more wins.” Build the hybrid stack, measure the four levers, and iterate weekly. Your calendar and your margins will show you it’s working.
